Building GraphPU: A Large-scale 3D GPU Graph Visualization Tool

The project is now open source! Visit GitHub (github.com/latentcat/graphpu) to access GraphPU source code and download the software (Windows / macOS).

Research Motivation

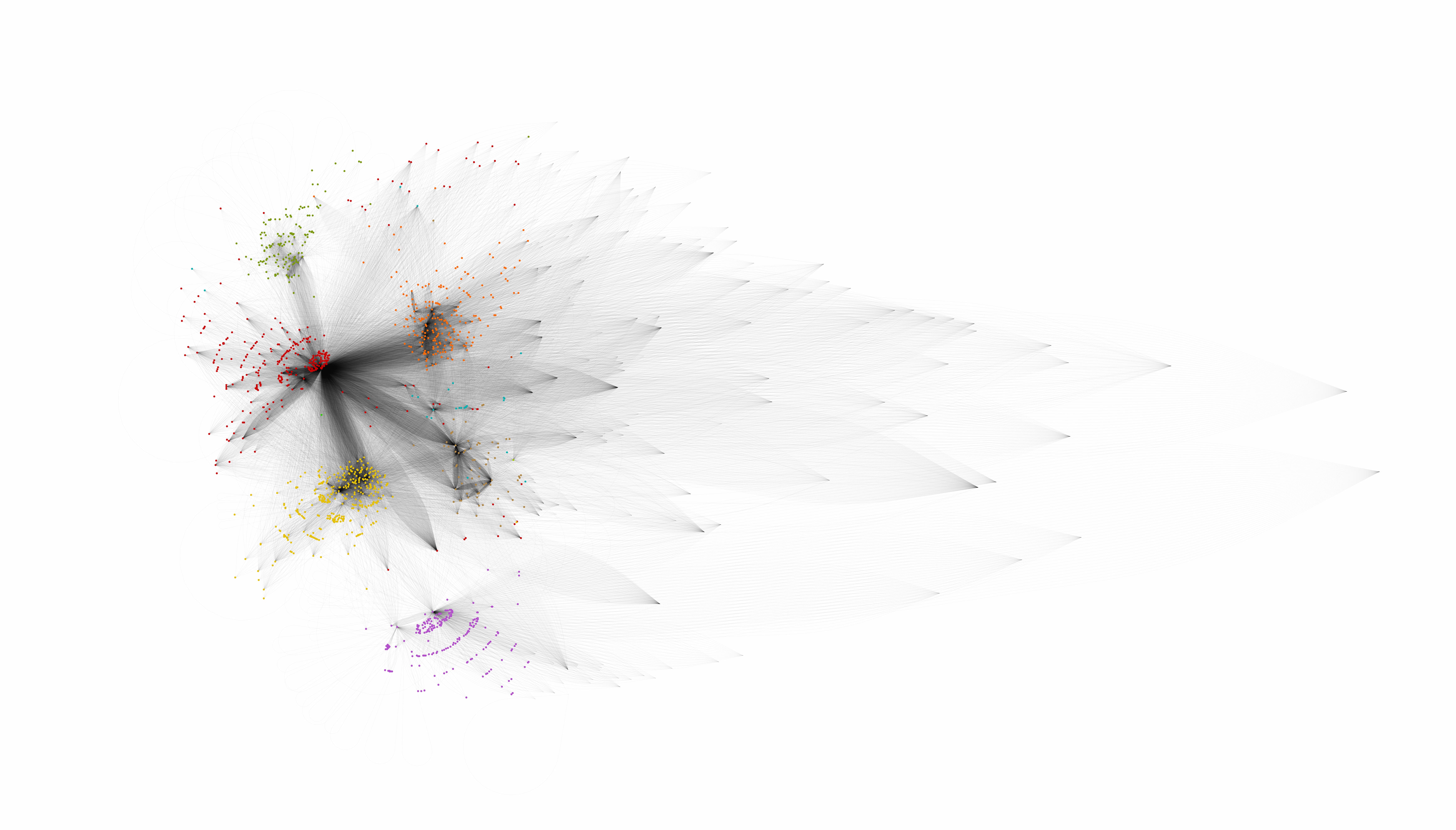

During my undergraduate studies, I learned about Barabási's research on scale-free networks, which sparked my interest in complex networks and social networks. At that time, I crawled the like data from my WeChat Moments of a thousand friends and visualized the friendship network using Mathematica.

The most fascinating part was that in Mathematica's visualization, my friends were grouped into different color clusters. When I checked the names in each cluster, I discovered that these groups perfectly corresponded to my elementary and middle school classmates, high school classmates, college classmates, online friends, and individually acquainted friends. Remarkably, the input data for the visualization contained no such labels - only information about who liked whose posts.

This sparked my interest in graph visualization, and I began to wonder what larger graphs would look like. For instance, what would the network of 1 billion WeChat users look like? How would it differ from the networks of hundreds of millions of Xiaohongshu and Weibo users?

This curiosity drove me and my fellow students, ![]() CPunisher,

CPunisher, ![]() Shichen, and

Shichen, and ![]() KirinoMizu, to develop GraphPU, a 3D GPU-accelerated graph visualization tool capable of visualizing millions of nodes.

KirinoMizu, to develop GraphPU, a 3D GPU-accelerated graph visualization tool capable of visualizing millions of nodes.

Existing Tools

There are many graph visualization software options, such as Gephi, Cytoscape, and the aforementioned Mathematica. However, they typically cannot handle graphs with more than 10,000 nodes. This limitation exists because their distribution simulation algorithms are usually based on CPU multi-threading, which not only leads to many serial dependencies during simulation but also causes significant frame delays due to extensive data transfer between CPU, RAM, VRAM, and GPU during rendering.

Moreover, these tools are primarily designed to help data scientists conduct specific analyses and research, so they usually simulate distributions in 2D space. However, I wanted audiences to have a more intuitive understanding of graphs, which is why we needed real-time simulation and rendering in three-dimensional space.

Unity Implementation

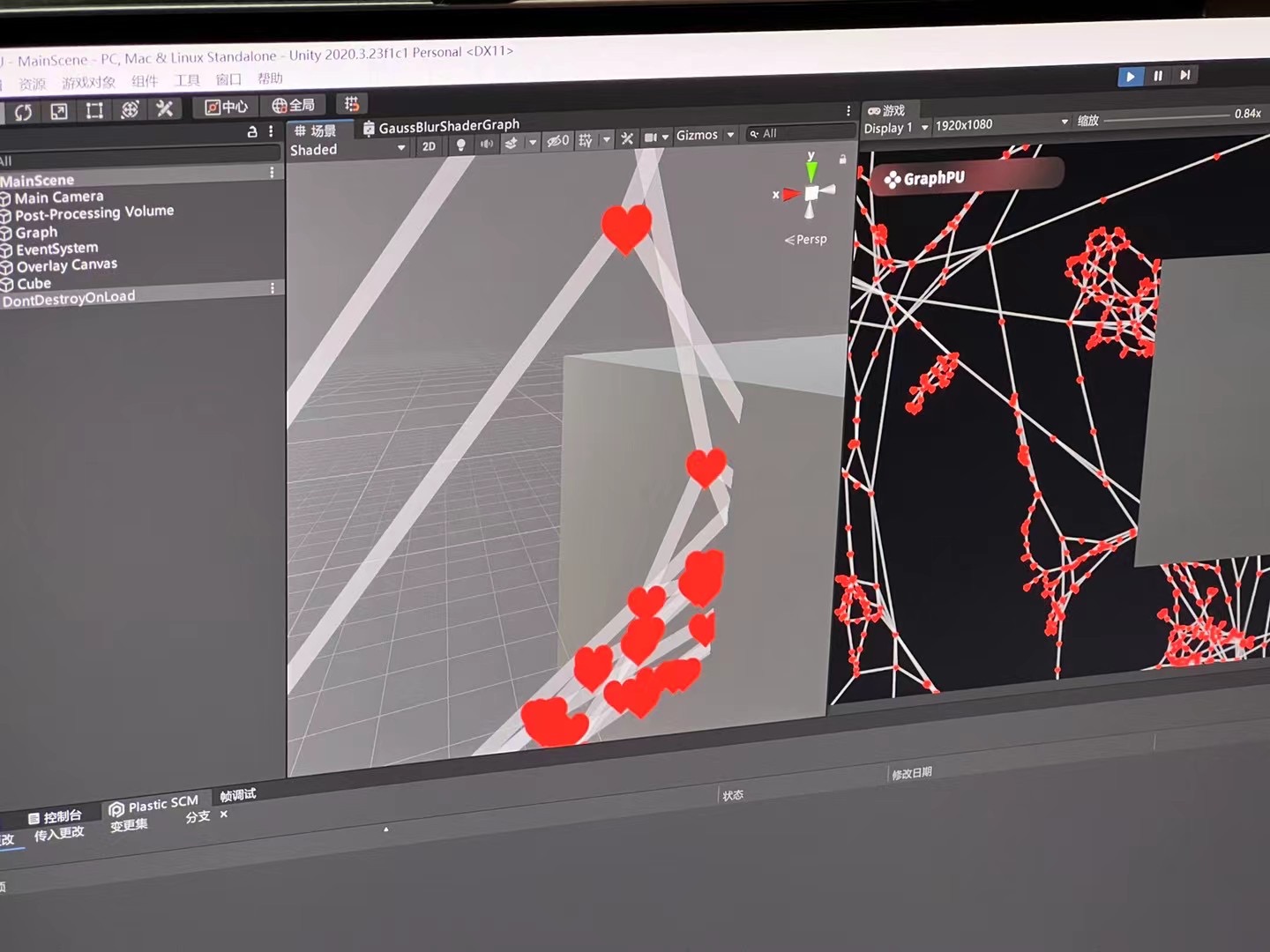

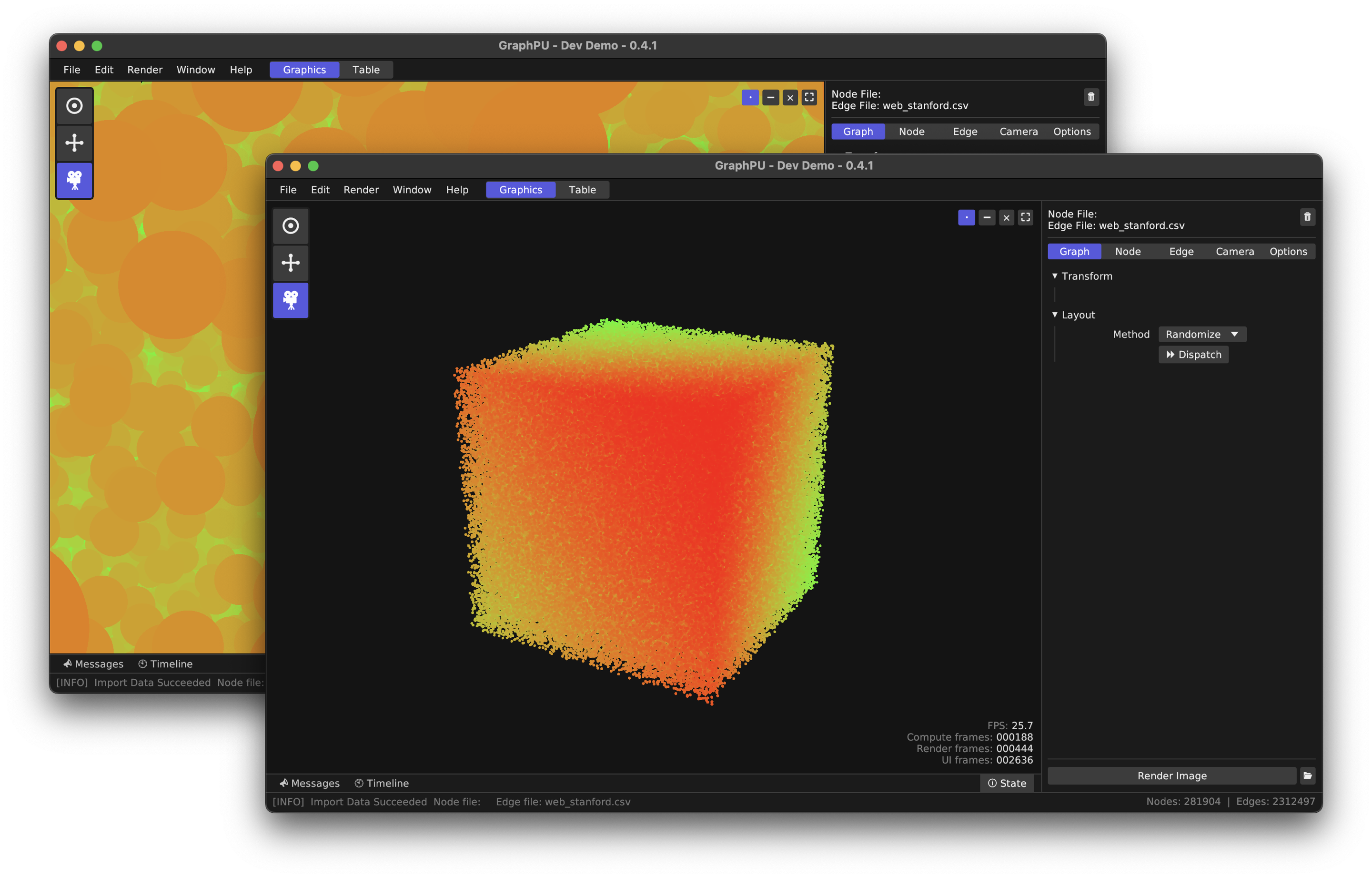

I built the prototype in Unity. Unity provides excellent abstraction and encapsulation for Compute Shaders, allowing flexible algorithm development and debugging.

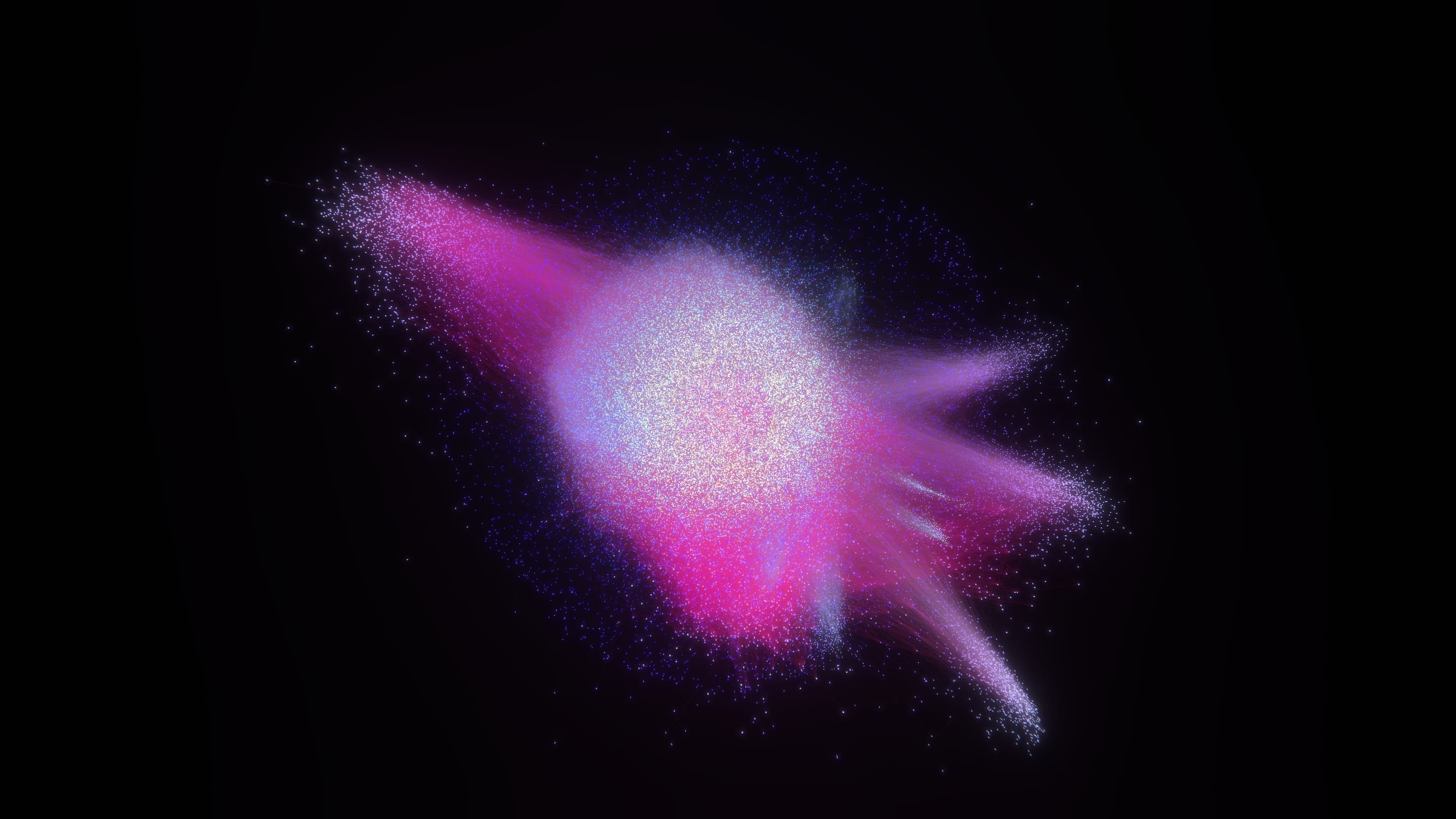

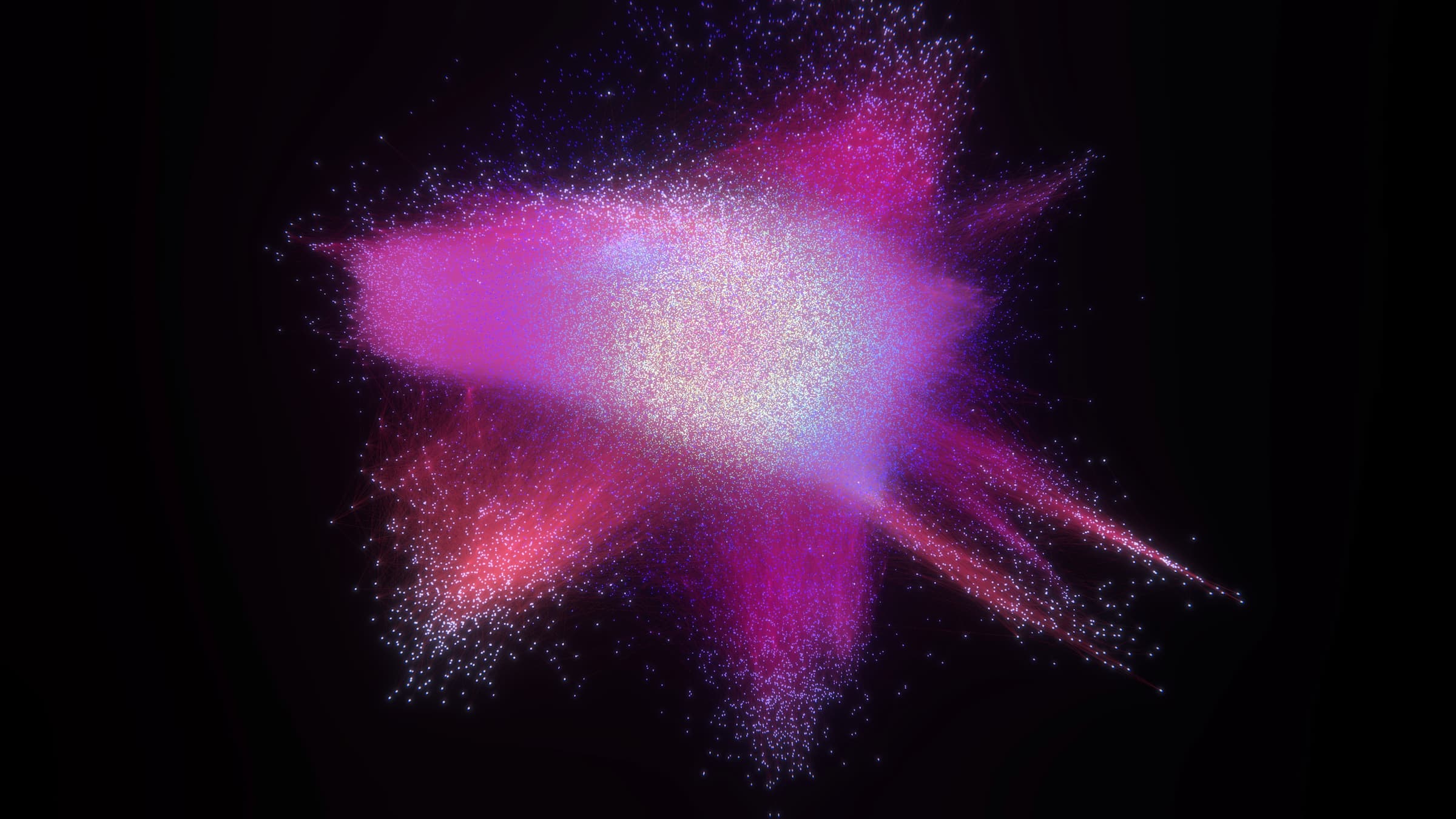

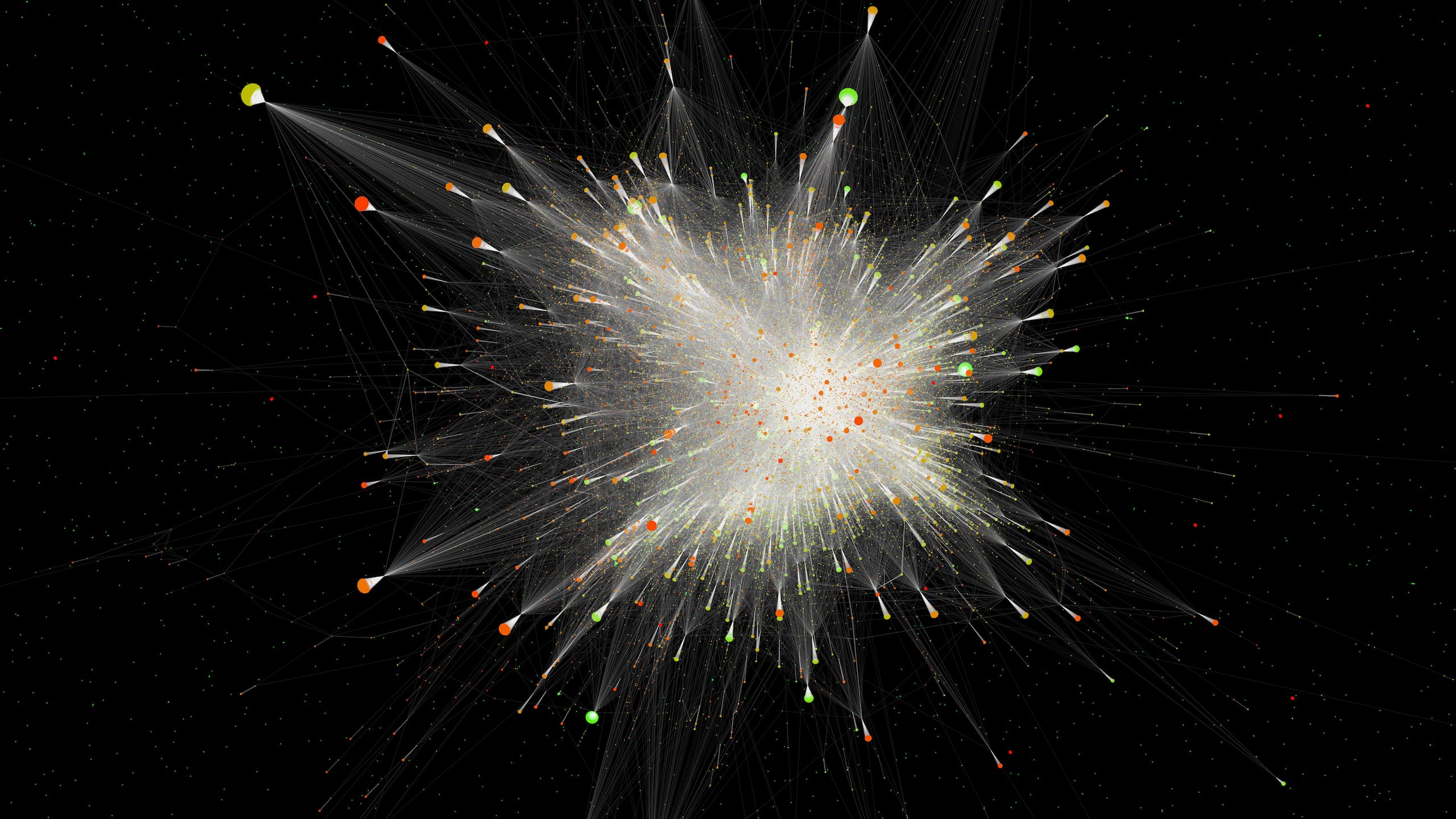

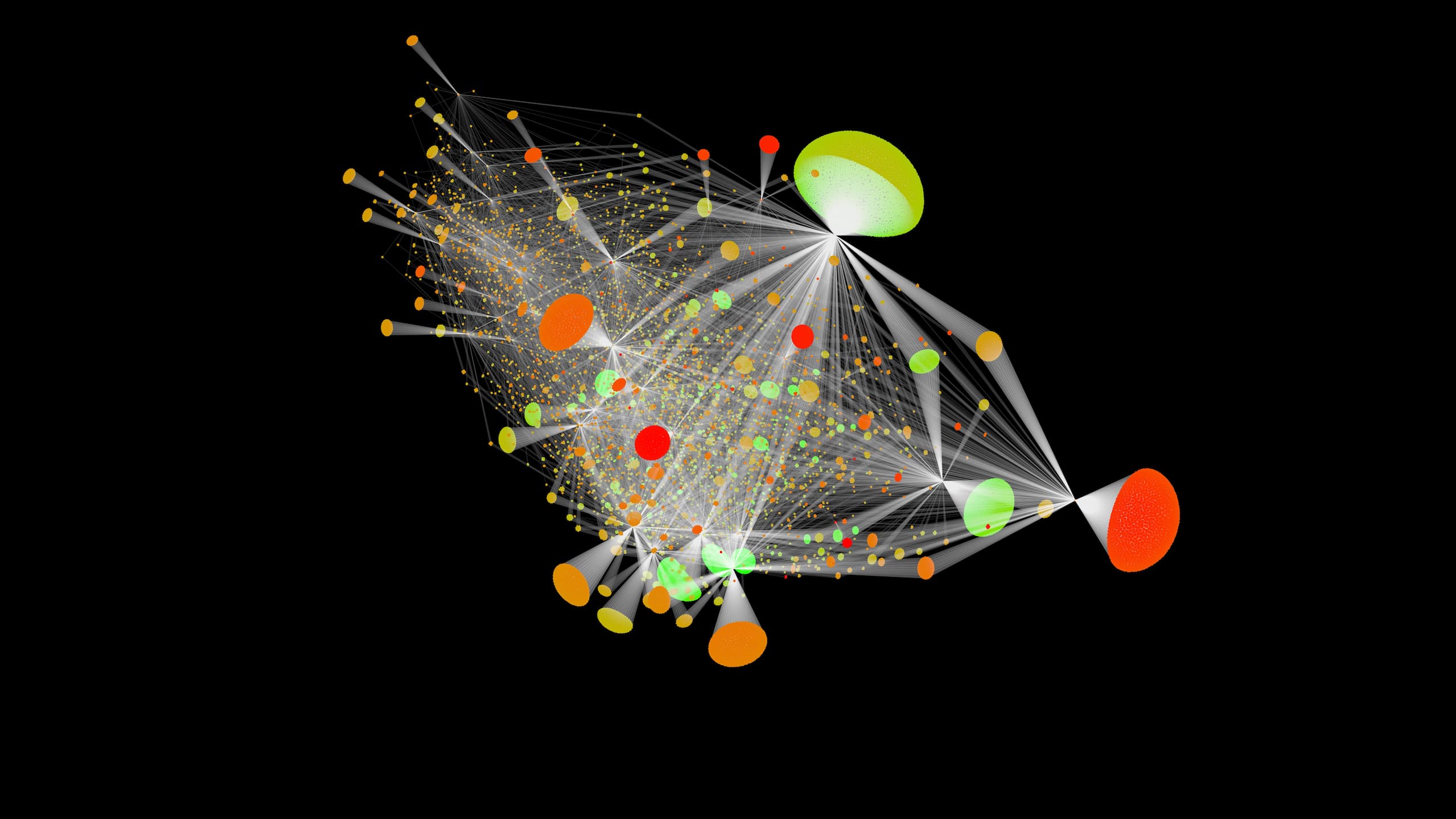

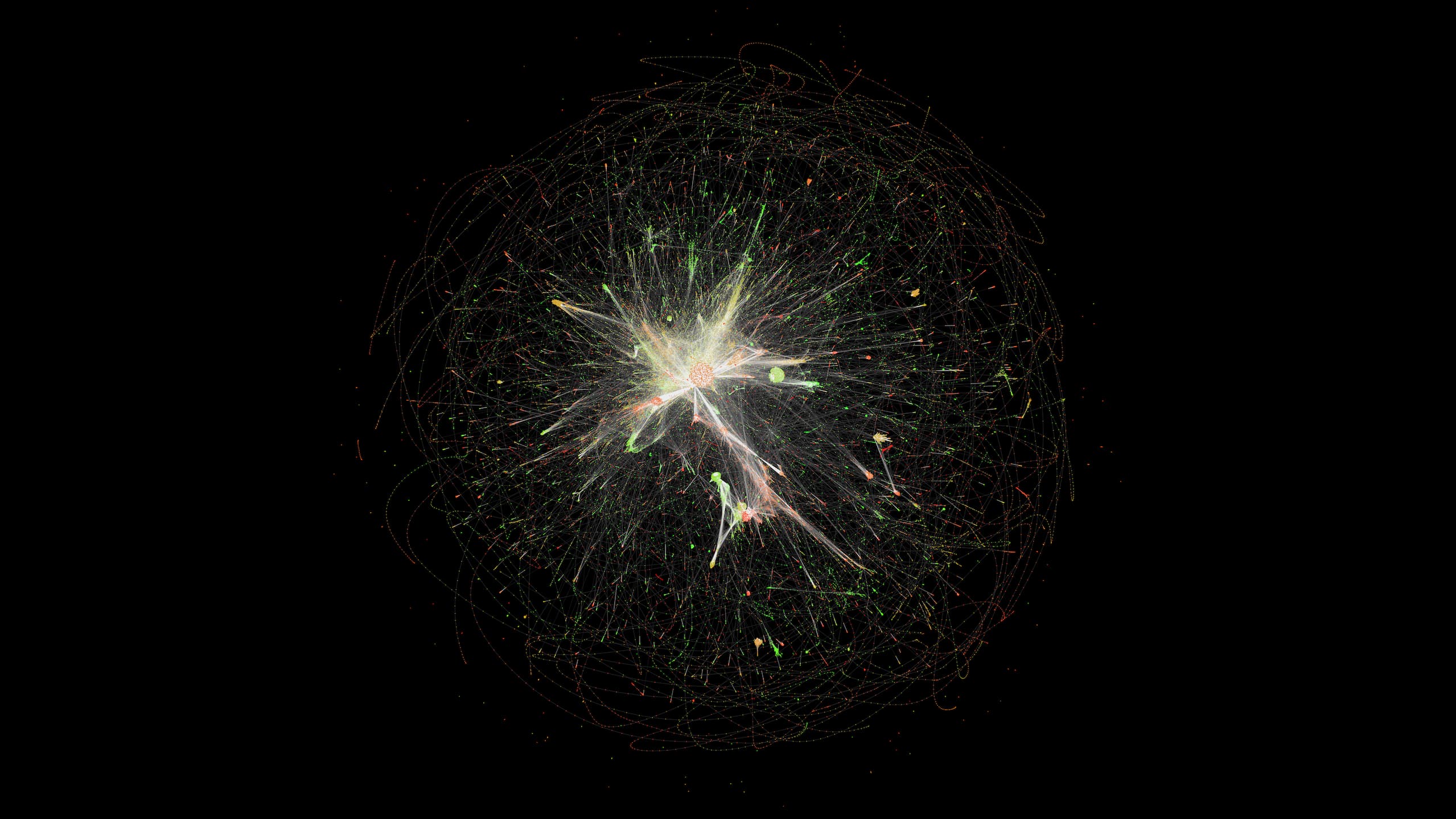

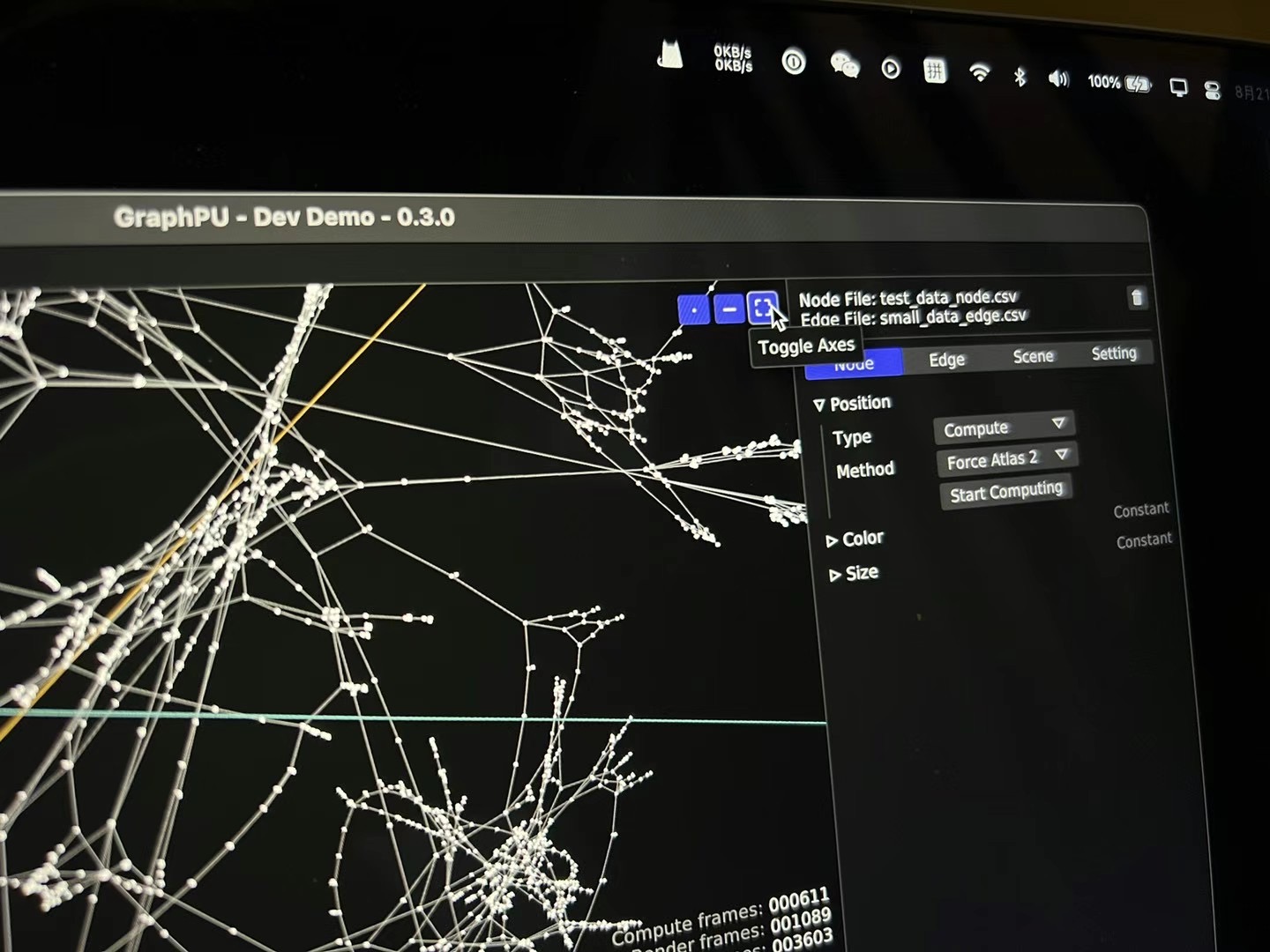

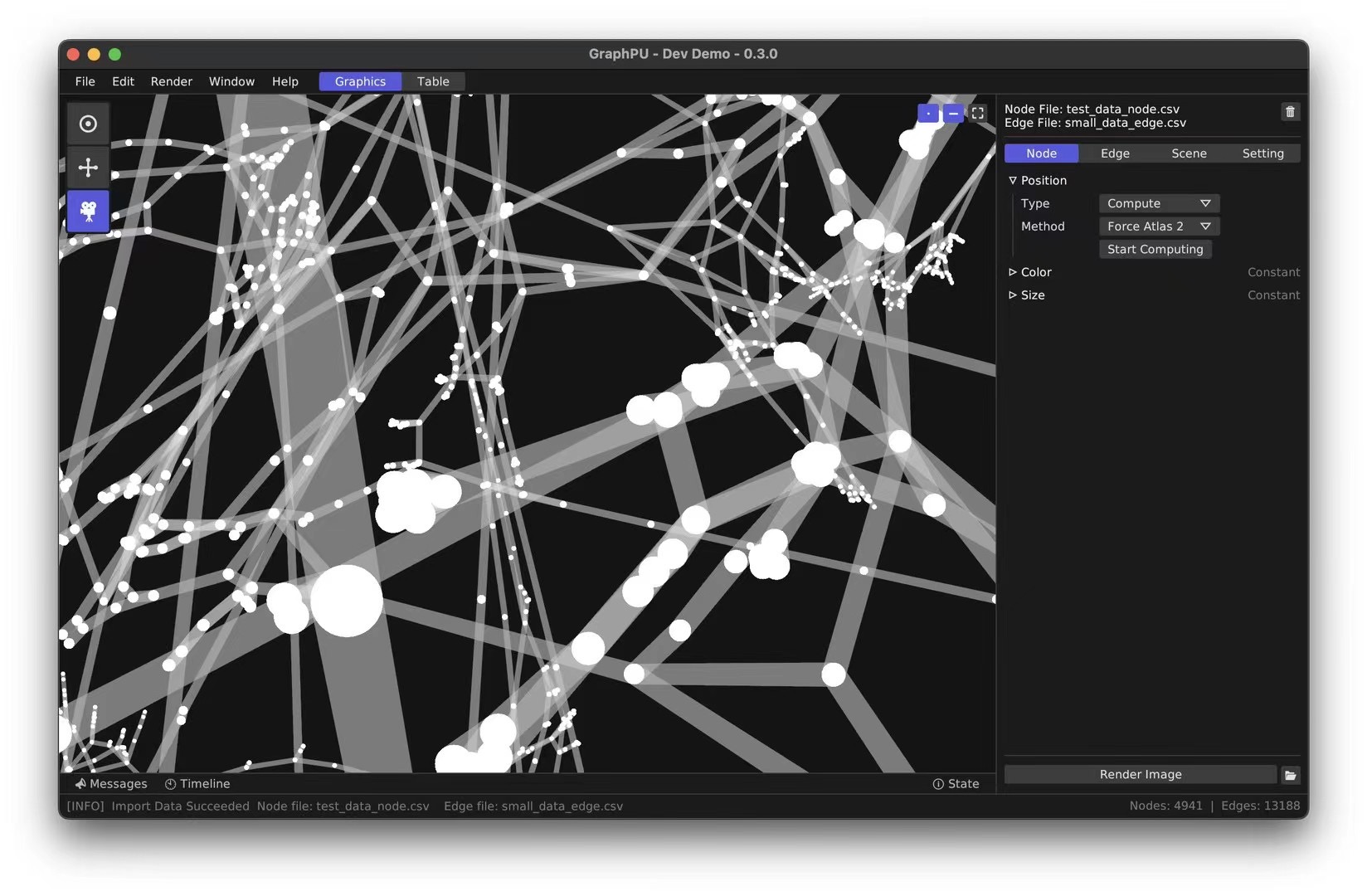

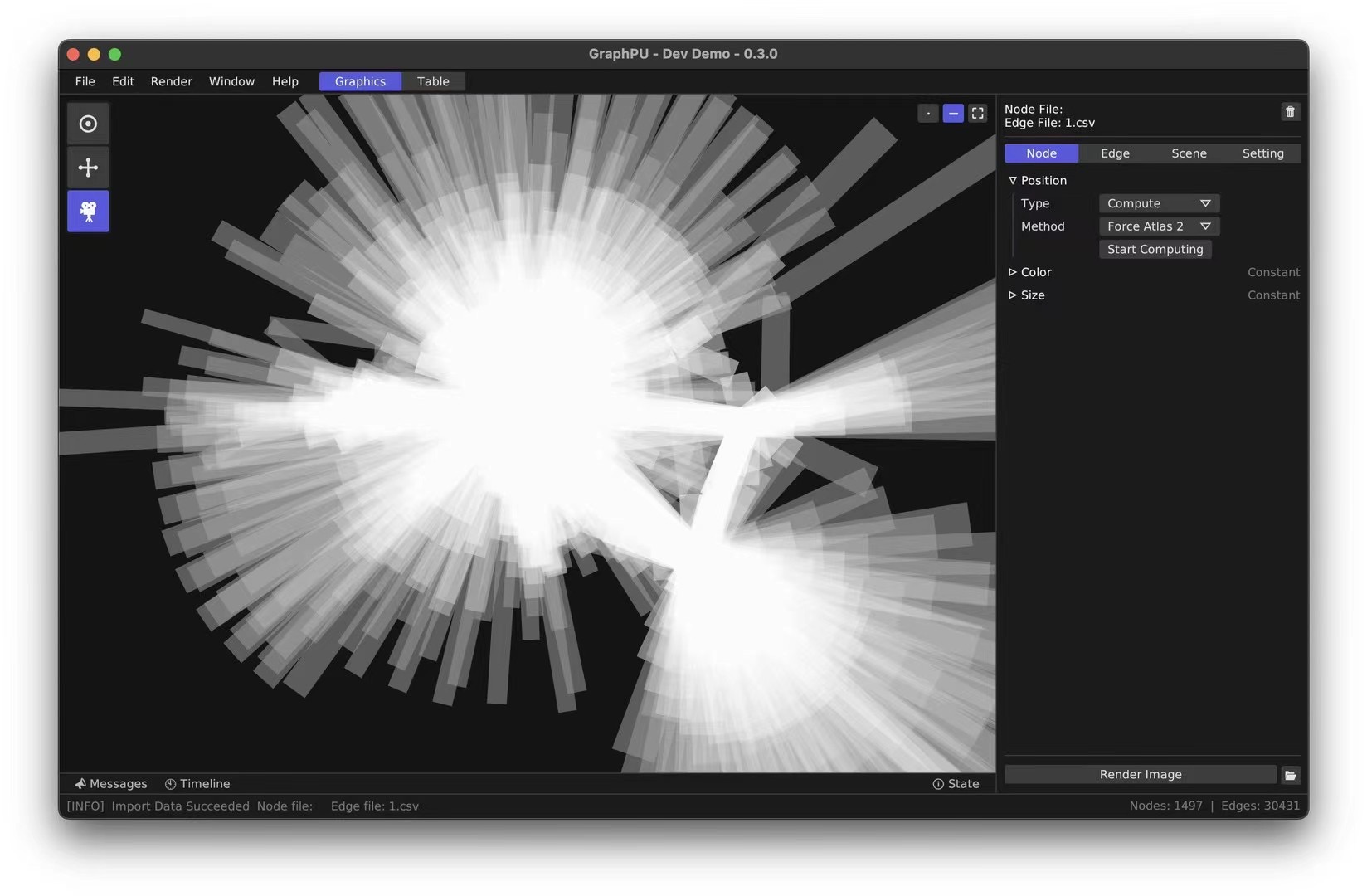

After a month of intense work, I achieved these stunning visualization results.

Rust Implementation

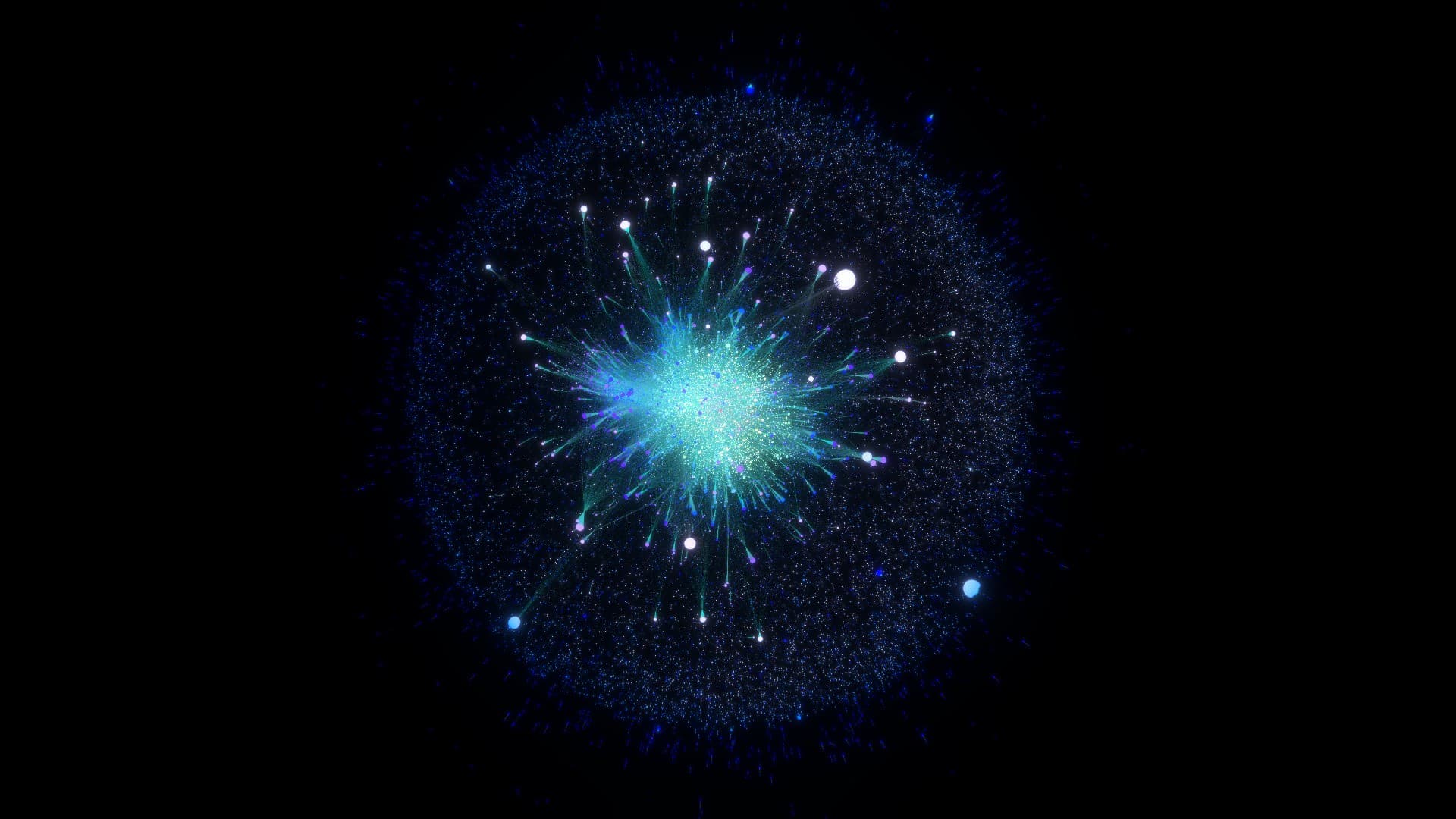

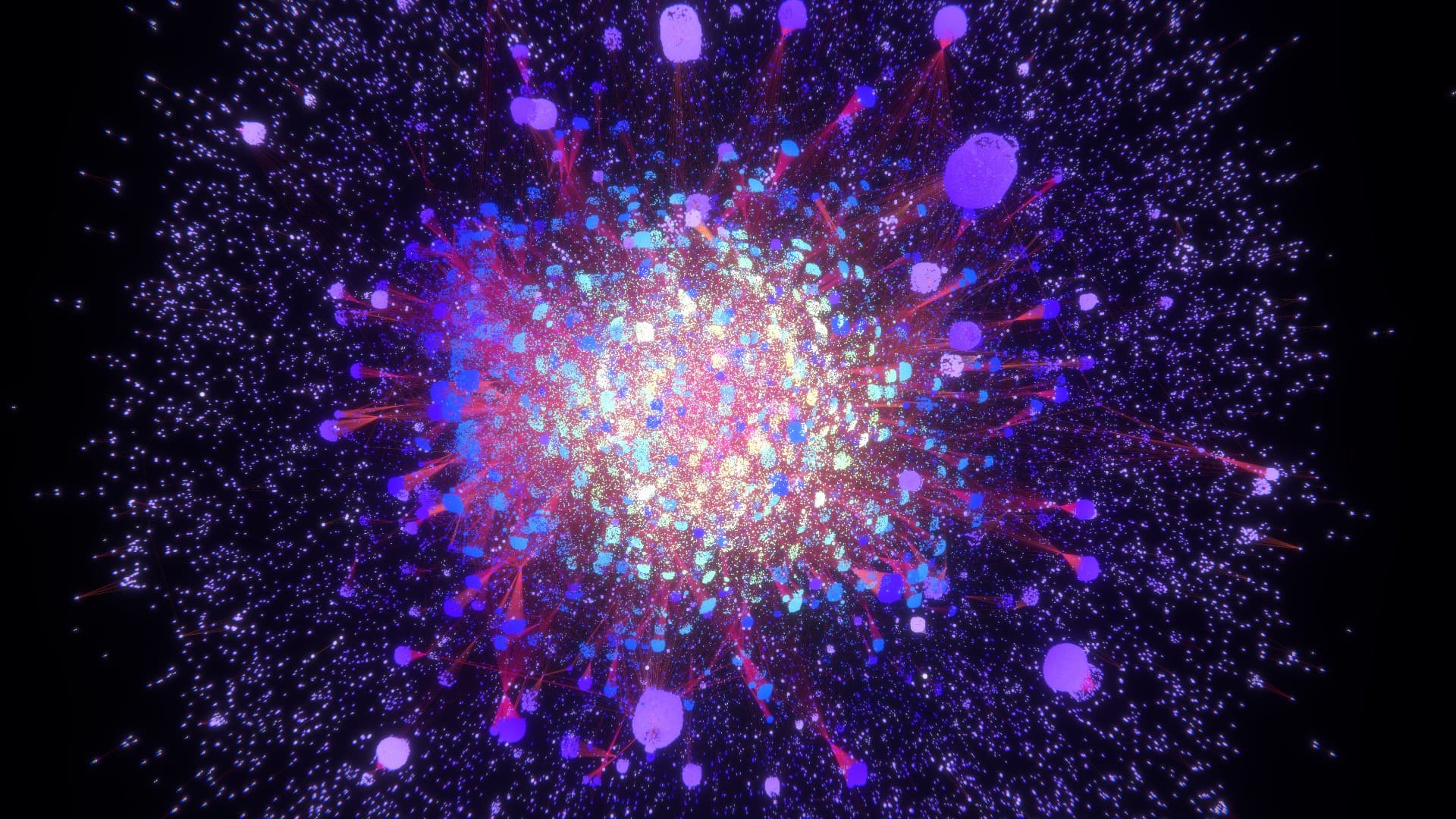

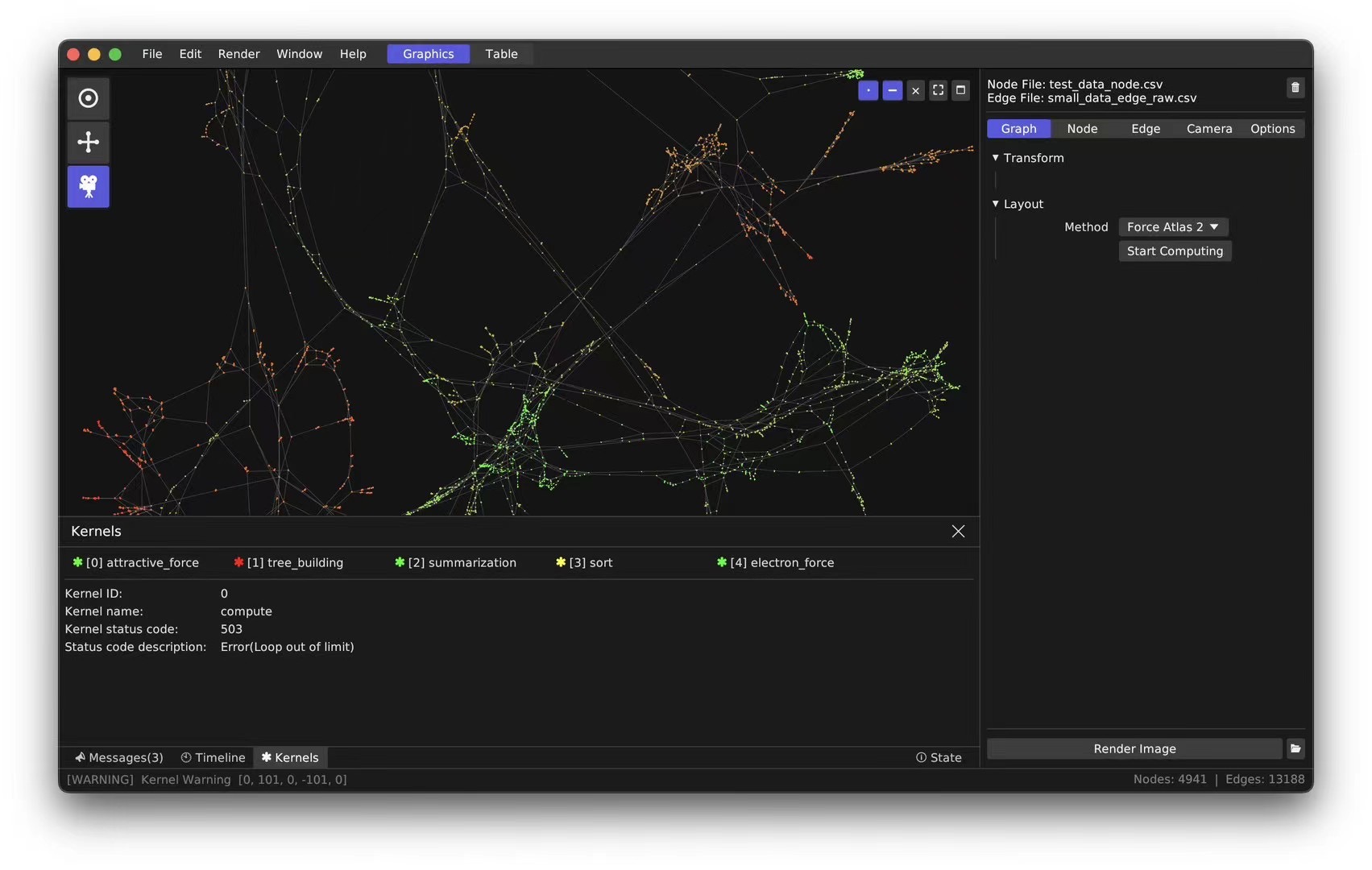

As development progressed, Unity's limitations became apparent, such as difficulties in developing tool UI and strict frame locking.

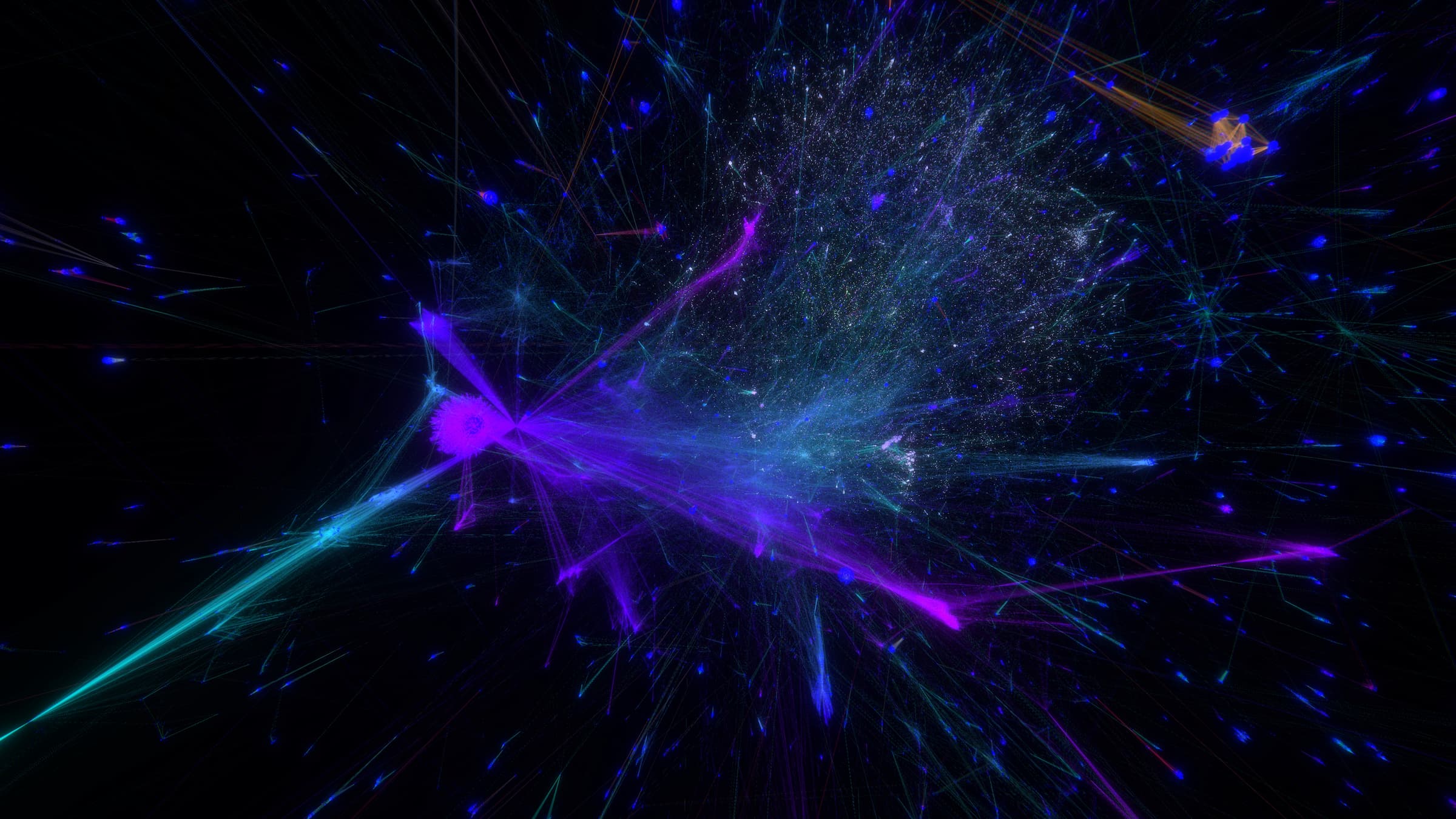

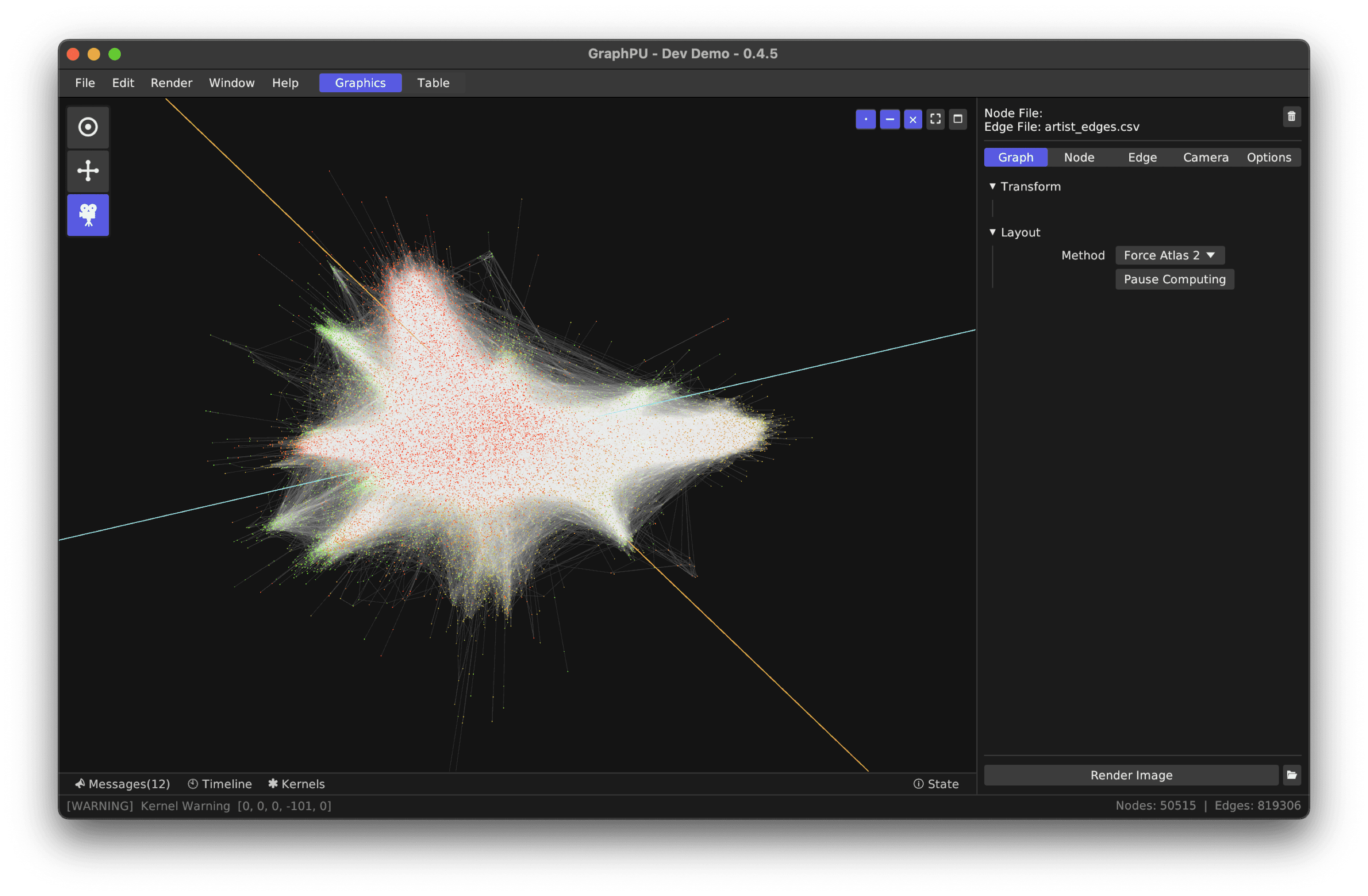

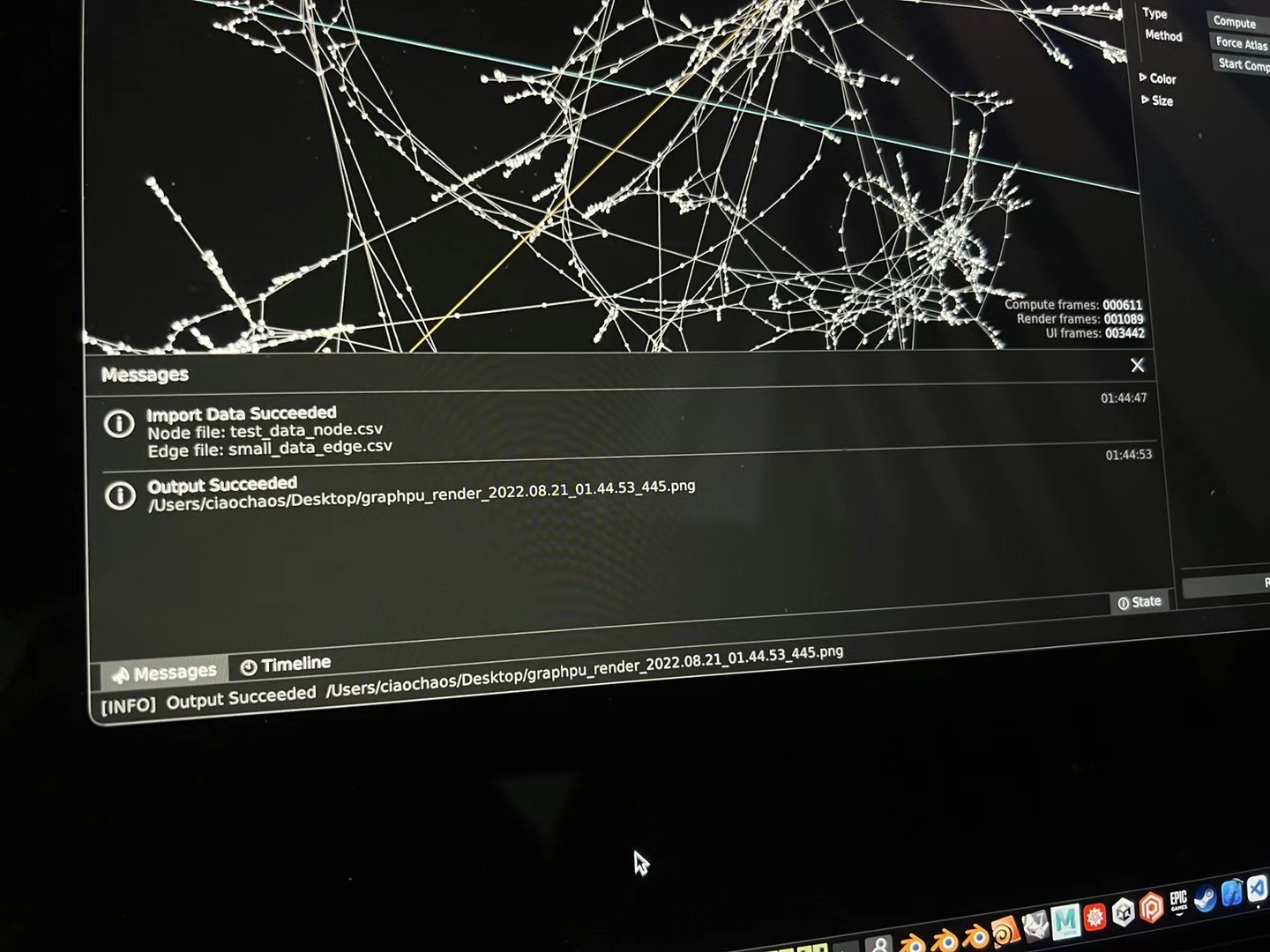

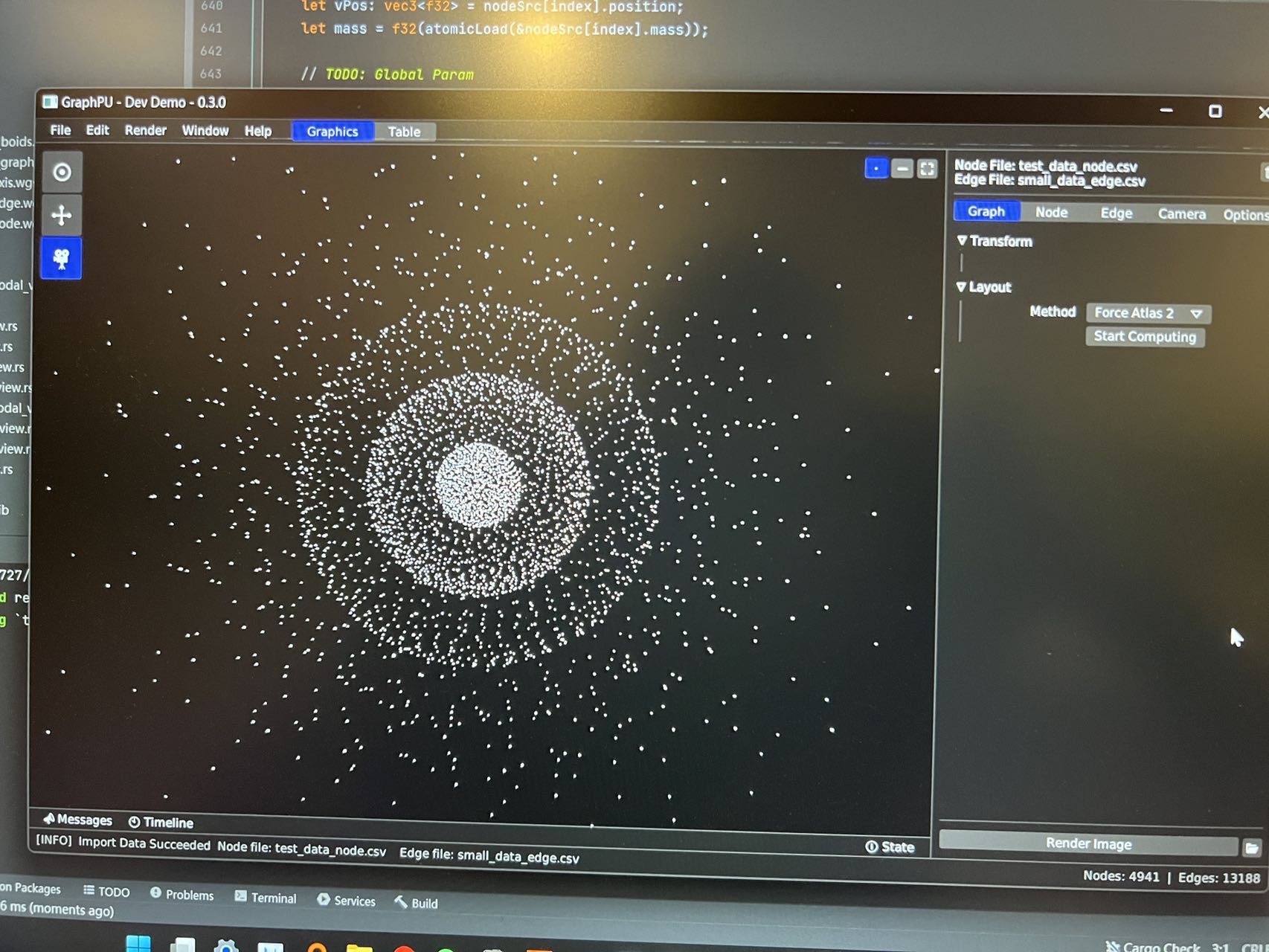

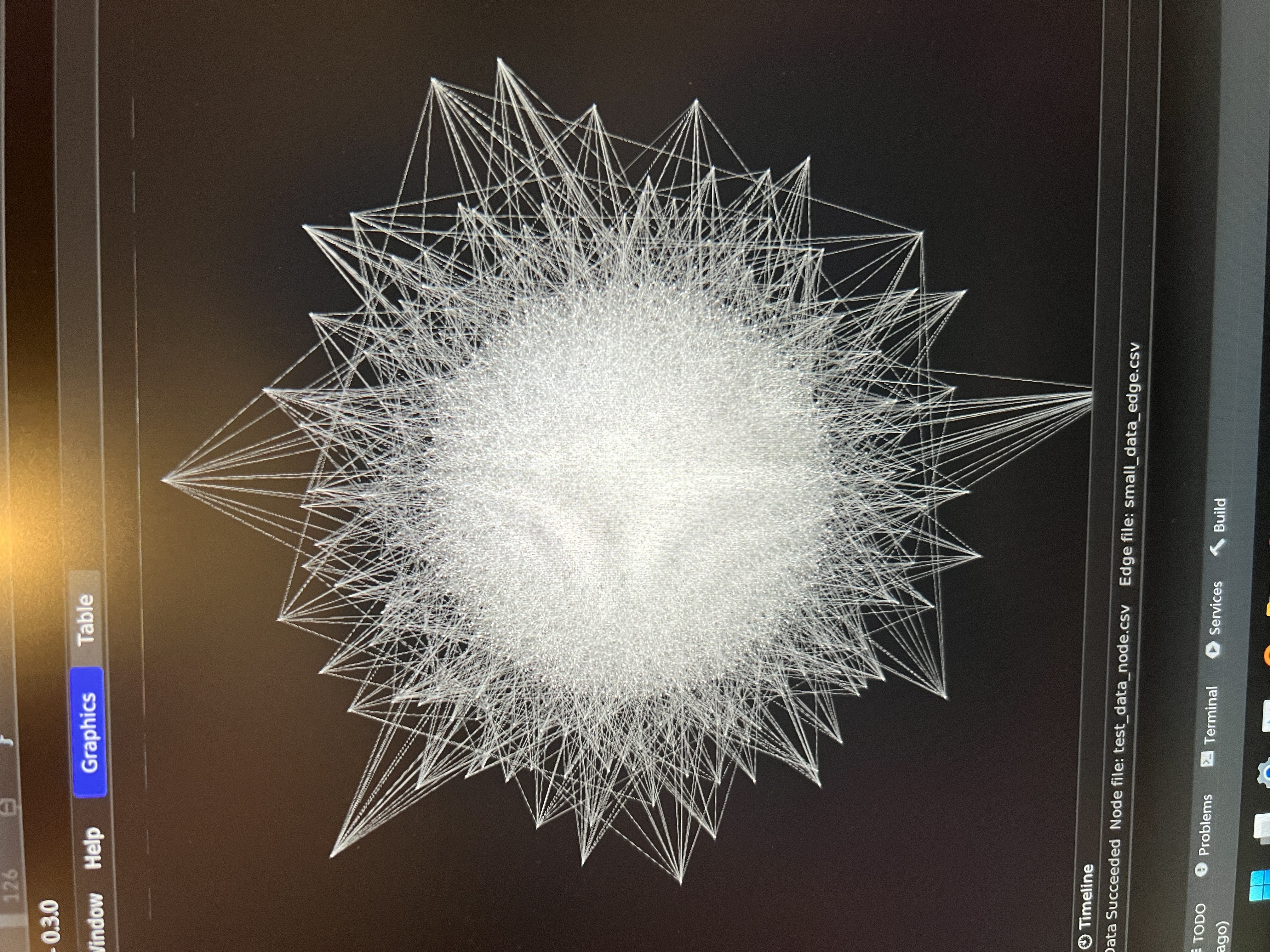

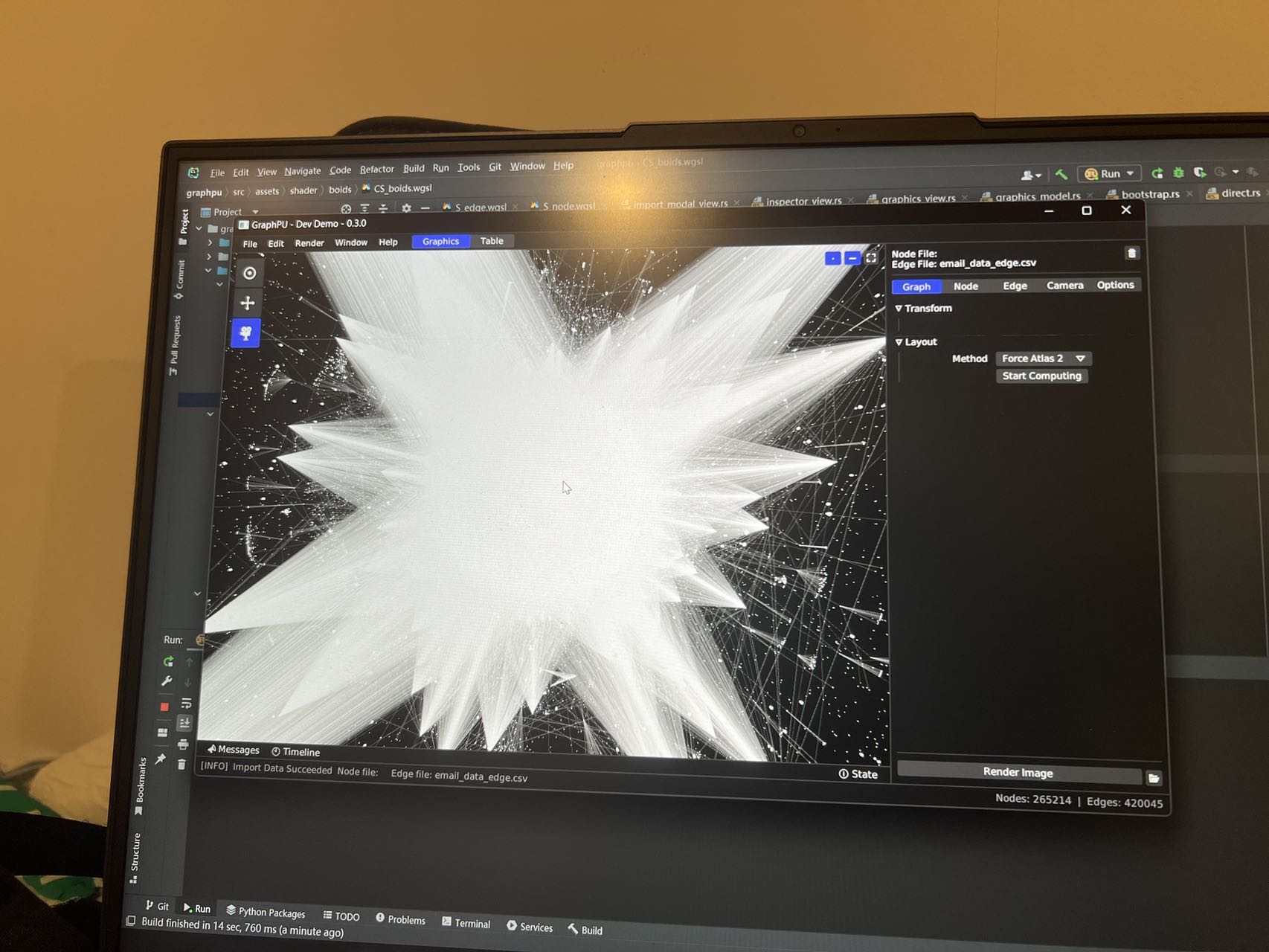

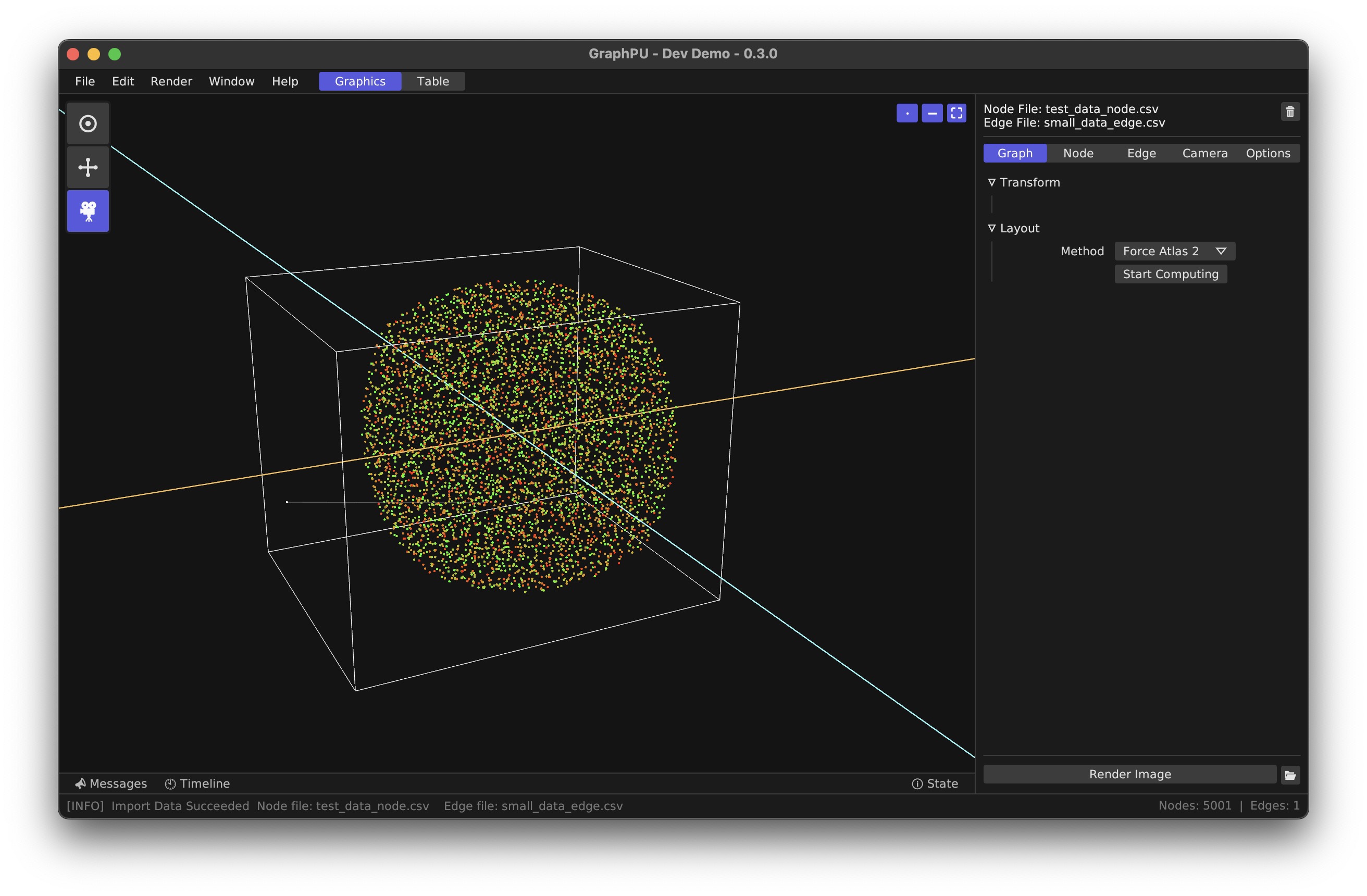

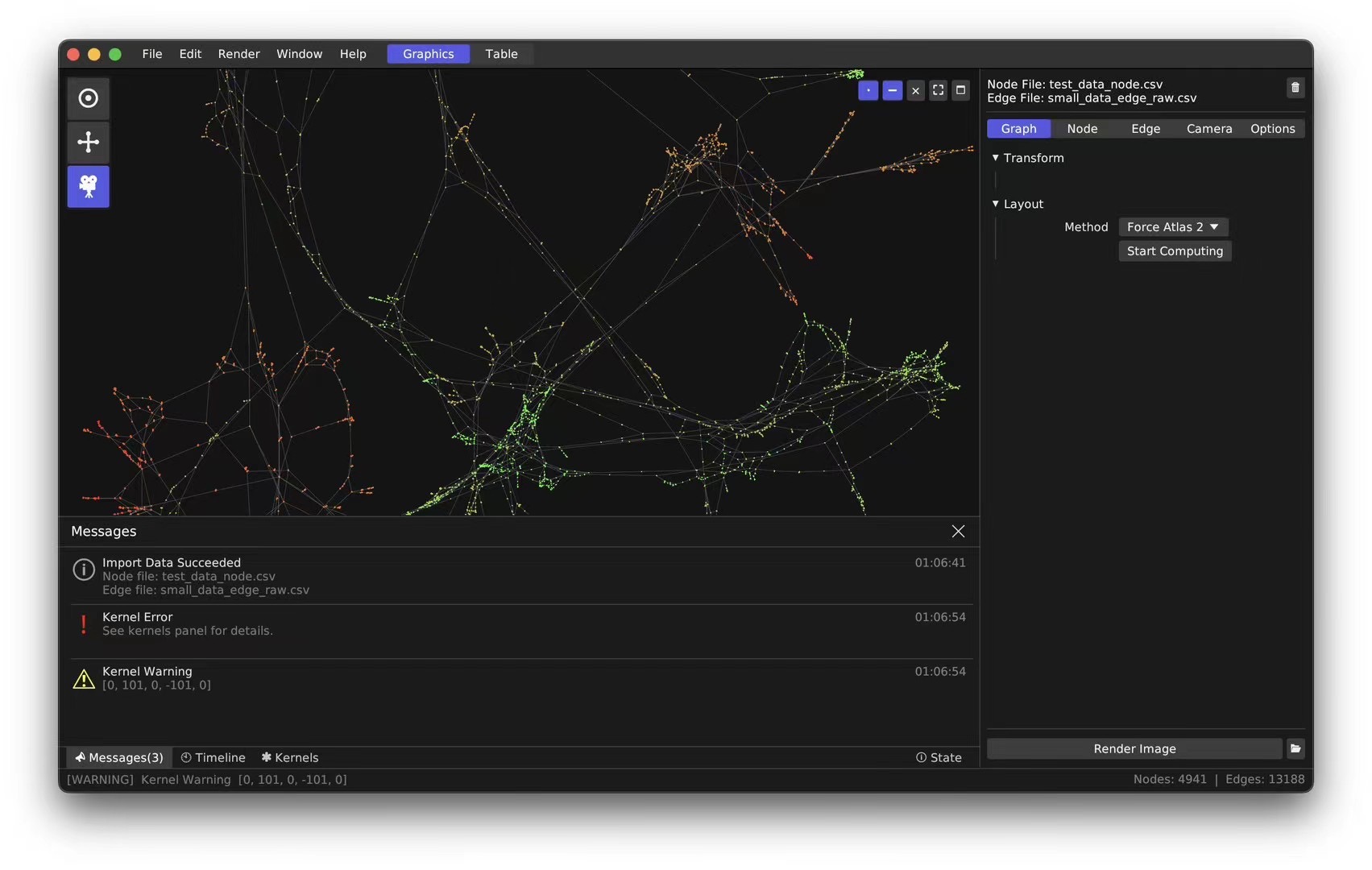

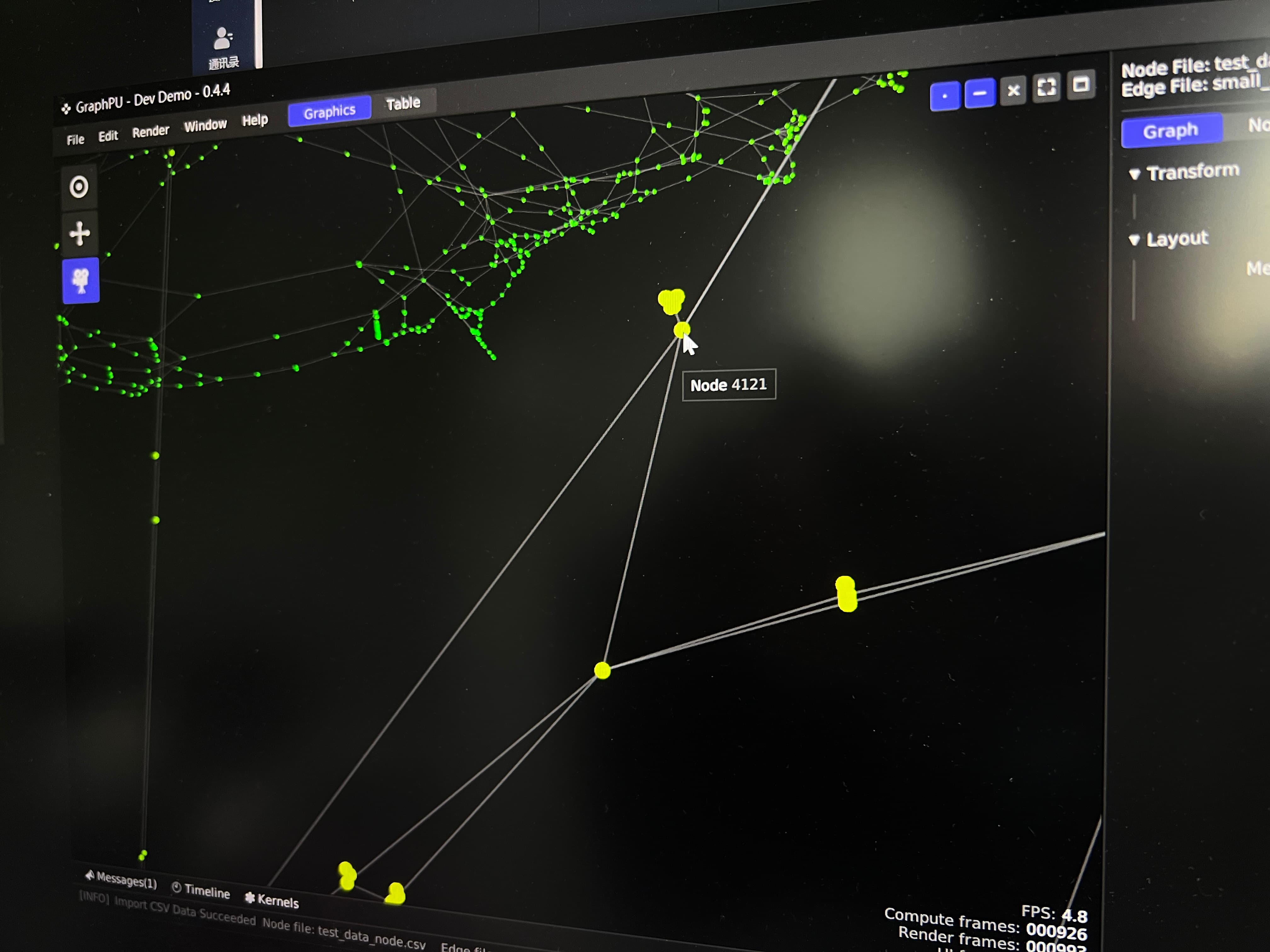

Eventually, I chose to build GraphPU in Rust. Here are some screenshots.

Technical Solutions

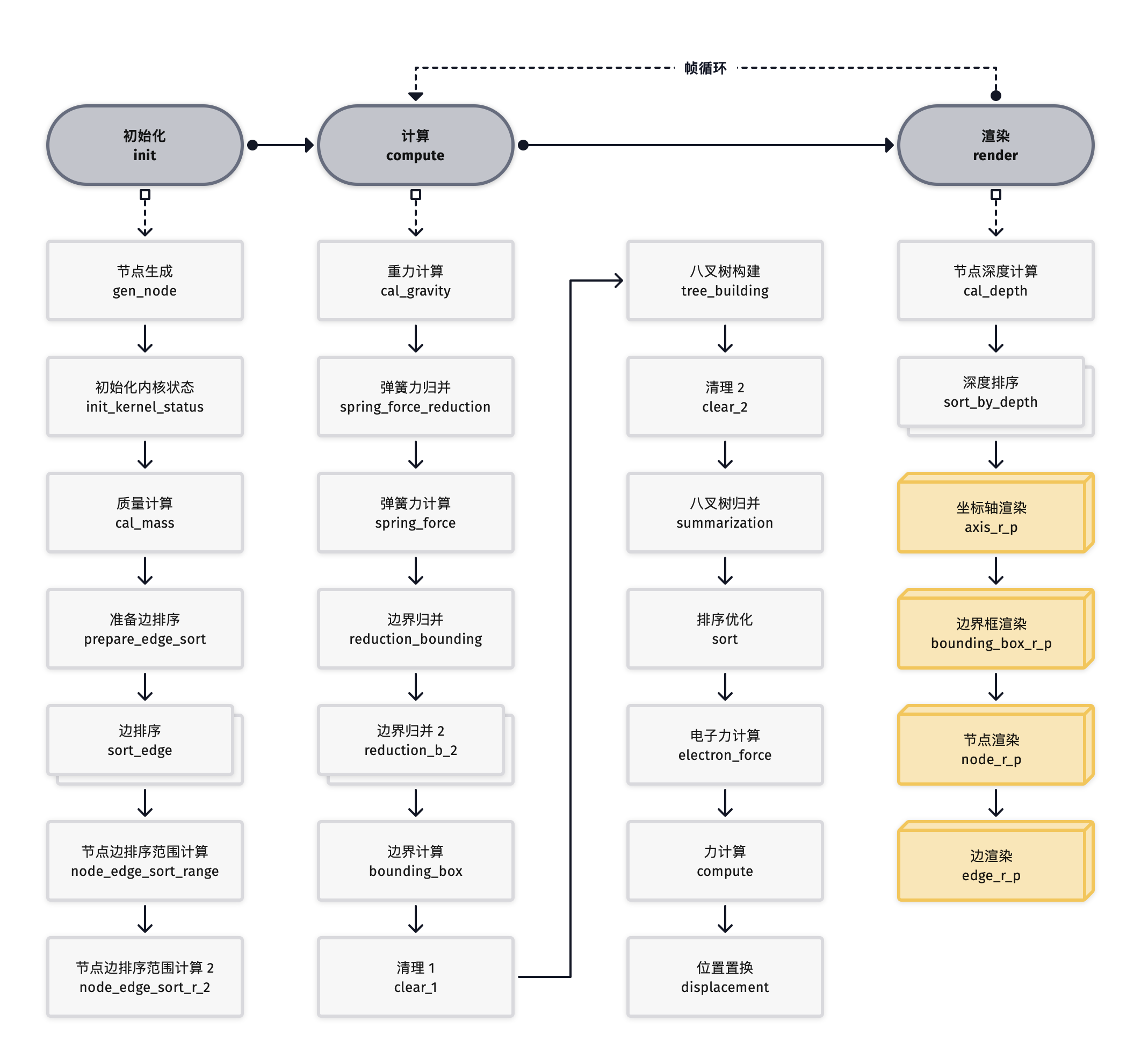

Behind the stunning visual effects lies a series of extreme optimizations. Without these technical solutions, GraphPU might only achieve 0.1 FPS instead of 60 FPS.

Floating-point Atomic Addition

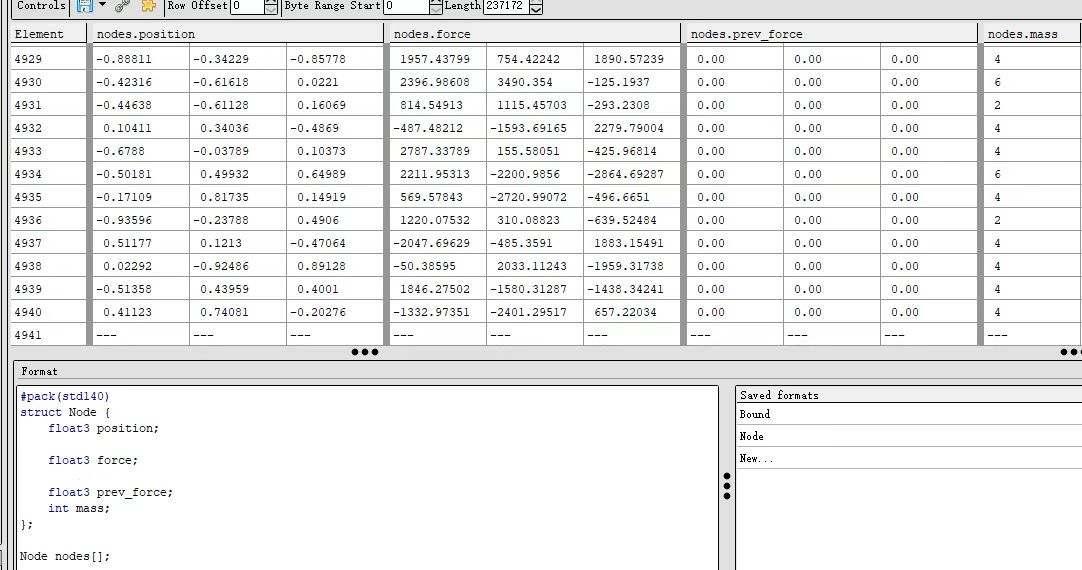

Graph visualization centers on calculating two forces: electron force (representing repulsion) and spring force (representing attraction). These forces interact to distribute the graph in a stable state in space.

To calculate spring forces in GPU (essentially a sparse matrix), we need to sum up the spring forces applied to each node. This involves a GPU characteristic where multiple GPU threads operating on the same data simultaneously can lead to race conditions. When multiple threads add spring forces to a node simultaneously, they might all read zero initially, resulting in only one spring force value being written.

To solve this, we need floating-point atomic addition for each node's x, y, and z dimensions. Atomic addition locks the value during addition, preventing other thread access until writing is complete. Most GPU programs provide atomic addition for integer types, but floating-point atomic addition requires software simulation.

In Unity, user-written HLSL shaders are sent to HLSLcc through ShaderLab and compiled into Metal, OpenGL, or other backend shader programs. In Rust wgpu, shader translation is handled by naga.

Due to WebGPU and WGSL standards being in draft state with frequent iterations, naga hadn't implemented atomicCompareExchangeWeak for WGSL's latest standard, which takes three parameters and returns a structure containing the original value and a boolean indicating change. Additionally, atomicExchange had bound check errors in Metal.

Therefore, we migrated the wgpu dependency from Rust crate to local and customized it. We wrote the atomicCompareExchangeWeak function using HLSL-like standards, ensuring its effectiveness in Metal and Vulkan.

This practice gave us a deeper understanding of shader compilers and valuable experience.

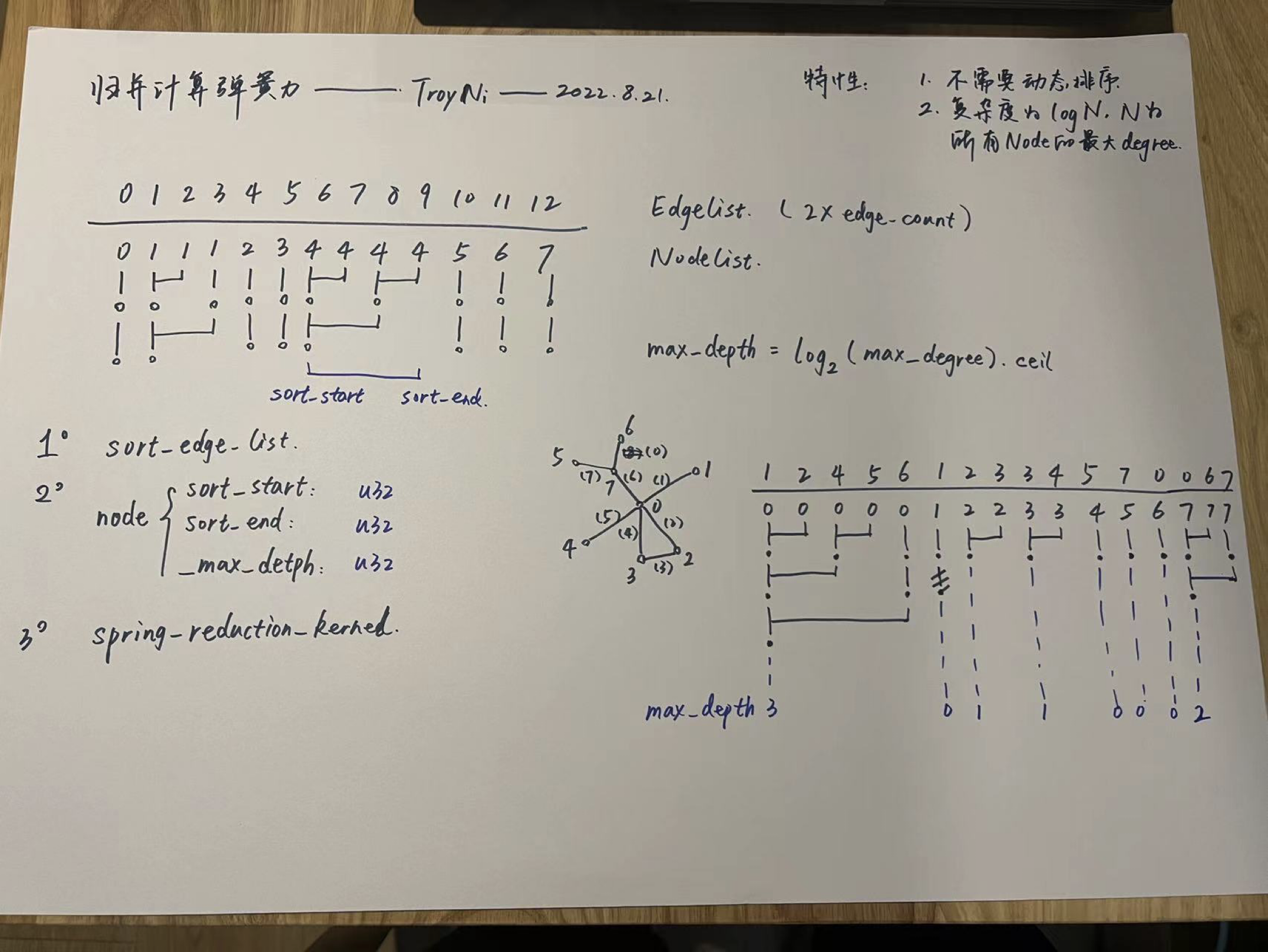

Spring Force Merge Acceleration

While floating-point atomic addition helps with spring force calculation, when the graph scale increases and many edges connect to a single node, that node's spring force calculation faces numerous serial dependencies, reaching O(n) complexity. Therefore, we invented a merge addition method specifically for spring forces, greatly accelerating the calculation.

Merge addition is a common GPU accumulation algorithm that accelerates addition through pairwise addition and gradual merging. In our algorithm, performing merge calculations separately for each node was a significant challenge, so we designed a sorting and alignment method to make this process incredibly efficient.

After optimization, if a node connects to 1 million edges, simulation speed can increase more than sixtyfold.

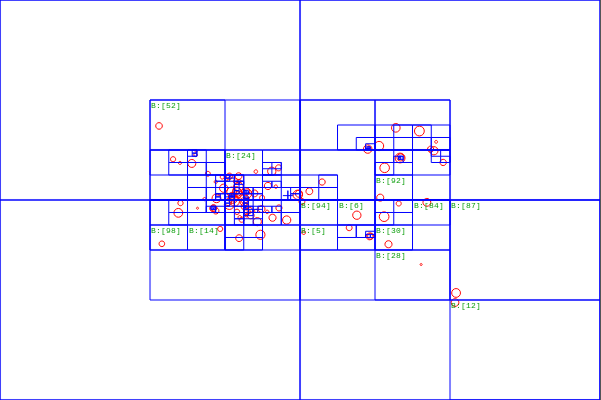

Parallel Version of Barnes-Hut Algorithm

The most crucial part of the graph visualization algorithm is calculating electron forces between nodes. With electrons interacting pairwise and electron forces being "omnipresent," the complexity is a solid O(n^2). The Barnes-Hut algorithm, by building a Barnes-Hut tree (similar to quadtree or octree), can merge distant nodes with minor influence in N-body simulation (electron force is essentially inverse gravitational force), approximating the force and greatly accelerating the simulation process, reducing complexity from O(n^2) to O(n log n).

In classic Barnes-Hut implementations, the algorithm is almost always CPU-based, mainly because Barnes-Hut tree construction uses recursion, while GPGPU has no recursion concept (except in CUDA-like environments) and can only simulate through loops and data-driven approaches.

Therefore, implementing a GPU-parallel Barnes-Hut algorithm is extremely complex. We wrote 1,000 lines of compute shader code for implementation, and these algorithms are extremely difficult to debug, encountering countless issues.

Bitonic Merge Sort for Particle Depth Sorting and Offscreen Rendering Raycast

Finally, we put significant effort into rendering. We solved particle depth sorting using bitonic merge sort, implemented mouse and particle raycast interaction using offscreen rendering, and completed our thesis exhibition setup with controller interaction.

Conclusion

This has been a long-term, extensive project, developed purely out of interest with almost no consideration for commercial conversion. I'm grateful for the collaborative efforts of ![]() CPunisher,

CPunisher, ![]() Shichen, and

Shichen, and ![]() KirinoMizu. The project is now open source at github.com/latentcat/graphpu, and you can download the Windows / macOS executable programs. Feel free to star and bookmark!

KirinoMizu. The project is now open source at github.com/latentcat/graphpu, and you can download the Windows / macOS executable programs. Feel free to star and bookmark!

Thank you!