Origins

In July 2024, I developed a kind of "AI fatigue." As an AI product manager, I couldn't muster any interest in AI-related matters. Perhaps experiencing the incredibly exciting industry cycle of Generative AI left me with PTSD, so I decided to do something with 0% AI content.

Audio

I wanted to revisit a field I'd always wanted to explore but never had time for: audio.

During my undergraduate years, influenced by the many sound engineering students around me, I read an amazing book: Designing Sound. It stunned me on first read. I was fascinated by its systematic modeling of sound synthesis. For example, to synthesize the sound of a flying insect, it breaks the sound down into the wings disturbing the air, the friction between wings and air, the sound at the muscle-wing connection point, and the sound of the muscles moving. Every time I opened it I wanted to learn more, but then I would see Pure Data's interface, which looks like a printer's settings menu (Professor Luo: this is practically cultural heritage now), with my AI models' exploding gradients sitting right beside it, and, well, I gave up.

Not only are the algorithmic principles difficult; implementing audio algorithms is also extremely challenging. Audio processing algorithms are generally more complex than image processing ones, and you can't get anywhere without the FFT. When I looked for a way to build an app that could synthesize and process audio, I searched the community and found that only commercial frameworks like JUCE could meet my development needs, and JUCE is C++, which makes for a less-than-ideal development experience.

Just as I was struggling to get started, I discovered an interesting Rust library: FunDSP.

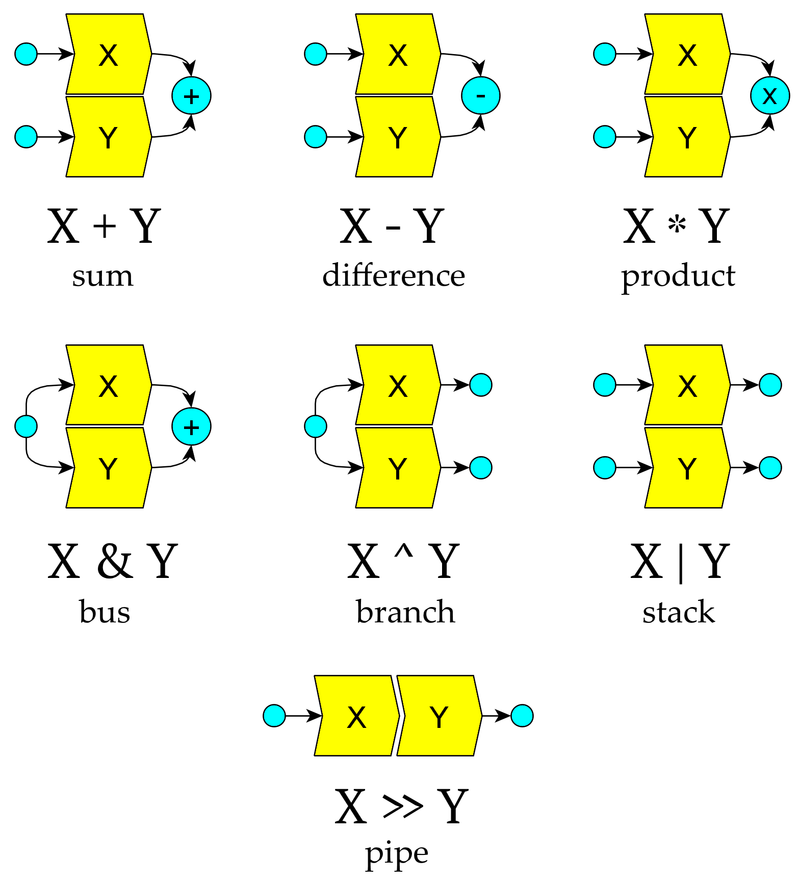

FunDSP is a Rust audio DSP library with an extremely interesting design that combines audio signal flow processing with Rust's operator overloading (implemented through traits). Audio signals can be manipulated with + - * /, all thanks to Rust's fascinating programming paradigm.
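To make the operator-overloading idea concrete, here is a minimal, self-contained sketch of how `+` and `*` can build a signal graph through Rust's `std::ops` traits. This is not FunDSP's actual API: the `Signal` trait, the `Node` wrapper, and the `osc` helper are all hypothetical names invented for illustration, and signals are sampled as a function of time rather than processed in blocks.

```rust
use std::f64::consts::TAU;
use std::ops::{Add, Mul};

/// Anything that can produce a sample at time `t` (in seconds).
/// Hypothetical trait for this sketch, not a FunDSP type.
pub trait Signal {
    fn sample(&self, t: f64) -> f64;
}

/// Sine oscillator at a fixed frequency in Hz.
pub struct Sine {
    pub hz: f64,
}

impl Signal for Sine {
    fn sample(&self, t: f64) -> f64 {
        (TAU * self.hz * t).sin()
    }
}

/// Sum of two signals, produced by `a + b`.
pub struct Sum<A, B>(A, B);

impl<A: Signal, B: Signal> Signal for Sum<A, B> {
    fn sample(&self, t: f64) -> f64 {
        self.0.sample(t) + self.1.sample(t)
    }
}

/// A signal scaled by a constant, produced by `a * 0.5`.
pub struct Gain<A>(A, f64);

impl<A: Signal> Signal for Gain<A> {
    fn sample(&self, t: f64) -> f64 {
        self.0.sample(t) * self.1
    }
}

/// Wrapper type that carries the operator impls. A local wrapper is needed
/// because Rust's coherence rules forbid `impl Add for T` over a bare generic `T`.
pub struct Node<S>(pub S);

impl<S: Signal> Node<S> {
    pub fn sample(&self, t: f64) -> f64 {
        self.0.sample(t)
    }
}

impl<A: Signal, B: Signal> Add<Node<B>> for Node<A> {
    type Output = Node<Sum<A, B>>;
    fn add(self, rhs: Node<B>) -> Self::Output {
        Node(Sum(self.0, rhs.0))
    }
}

impl<A: Signal> Mul<f64> for Node<A> {
    type Output = Node<Gain<A>>;
    fn mul(self, gain: f64) -> Self::Output {
        Node(Gain(self.0, gain))
    }
}

/// Convenience constructor (hypothetical name).
pub fn osc(hz: f64) -> Node<Sine> {
    Node(Sine { hz })
}
```

With these definitions, `(osc(440.0) + osc(660.0)) * 0.5` type-checks as a single composed node, and the whole graph is resolved at compile time because each operator returns a new concrete type.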

Following FunDSP's examples, I adapted a simple synthesizer. It can play multiple notes, each with an ADSR envelope driving a waveform such as a sine wave, and the result then passes through filters, harmonic effects, and reverb to produce the final audio.
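For readers unfamiliar with ADSR, the envelope part can be sketched in plain Rust as below. This is an illustrative, linear-segment envelope written from scratch, not the code from the project or from FunDSP; the `Adsr` struct, its field names, and `render_note` are assumptions made for the example.

```rust
use std::f64::consts::TAU;

/// Linear attack/decay/sustain/release envelope (hypothetical type).
#[derive(Clone, Copy, Debug)]
pub struct Adsr {
    pub attack: f64,  // seconds to rise from 0 to 1
    pub decay: f64,   // seconds to fall from 1 to `sustain`
    pub sustain: f64, // held level, 0.0..=1.0
    pub release: f64, // seconds to fall to 0 after the key is released
}

impl Adsr {
    /// Level while the key is still held, `t` seconds after note-on.
    fn held(&self, t: f64) -> f64 {
        if t < self.attack {
            t / self.attack
        } else if t < self.attack + self.decay {
            let d = (t - self.attack) / self.decay;
            1.0 + (self.sustain - 1.0) * d
        } else {
            self.sustain
        }
    }

    /// Envelope level at time `t` for a key held for `gate` seconds.
    pub fn level(&self, t: f64, gate: f64) -> f64 {
        if t < 0.0 {
            return 0.0;
        }
        if t < gate {
            return self.held(t);
        }
        // Release starts from whatever level the note had at key-up.
        let start = self.held(gate);
        let r = t - gate;
        if r >= self.release {
            0.0
        } else {
            start * (1.0 - r / self.release)
        }
    }
}

/// Render `n` samples of a sine note shaped by the envelope.
pub fn render_note(env: &Adsr, hz: f64, gate: f64, sample_rate: f64, n: usize) -> Vec<f64> {
    (0..n)
        .map(|i| {
            let t = i as f64 / sample_rate;
            (TAU * hz * t).sin() * env.level(t, gate)
        })
        .collect()
}
```

A note is then just `render_note(&env, 440.0, 1.0, 44_100.0, 66_150)`: a 440 Hz sine held for one second, followed by its release tail. Filters and reverb would come after this stage.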

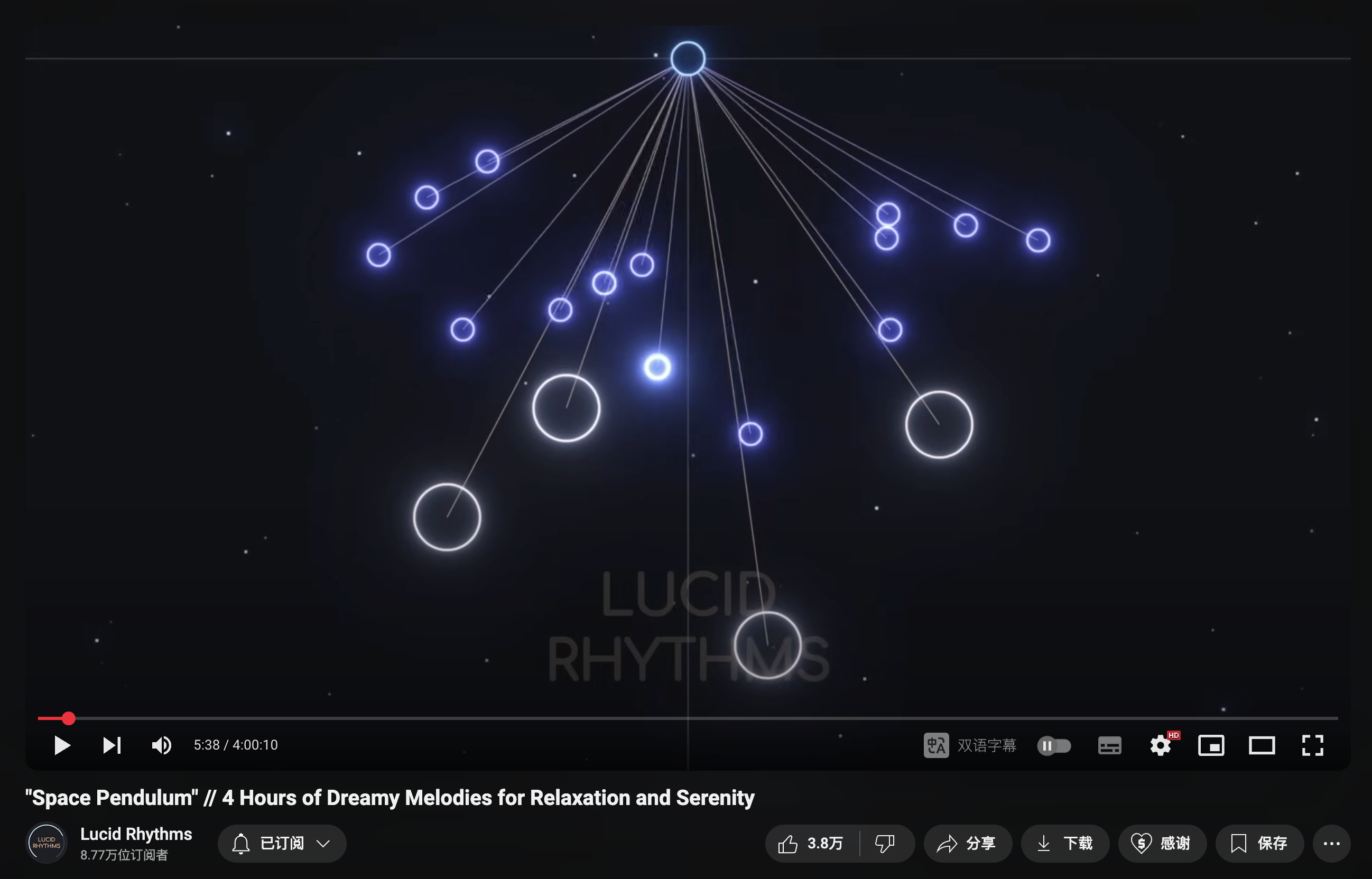

I used this sound generation mechanism to programmatically recreate the polyrhythm visualizations that circulate widely on YouTube.

Rendering

After solving the audio development challenges, the next big task was rendering.

Initially, I wanted to use Godot as the rendering engine. When I first encountered it, I was very excited (probably from suffering with Unity and Unreal for too long): here was an engine that was genuinely lightweight, offered a good development experience with its own scripting language and IDE, had a growing number of tutorials (including from my favorite Unity creator, Brackeys), and had a well-maintained, community-built Rust extension. It didn't take long to integrate the Rust sound algorithms, and the result worked on all platforms.

But once that was done, the problems emerged.

At that time, Godot didn't support compiling directly to Metal for macOS and iOS. Everything had to go through Vulkan, which not only caused performance issues but also left feature support incomplete. For example, compute shaders (GPGPU), which are commonly used in visual effects development, were almost unusable in Godot, whereas Unity (Visual Effect Graph) and Unreal (Niagara) have built-in GPU particle systems that can interact with project code.

Unity was the better choice after all; I had been wrong about it.

The second issue arose when I began developing the concept of "visual albums": packaging these interactive audio-visual scenes into "albums." On mobile, the result should feel like a music player app, so I tried to implement an iOS app UI in Godot, thinking it would be cool, like those Web3D websites. But reality hit hard: even the latest iPhone couldn't handle a real-time rendered main menu at 120Hz on top of rendering each scene in the Viewport.

All in Rust!

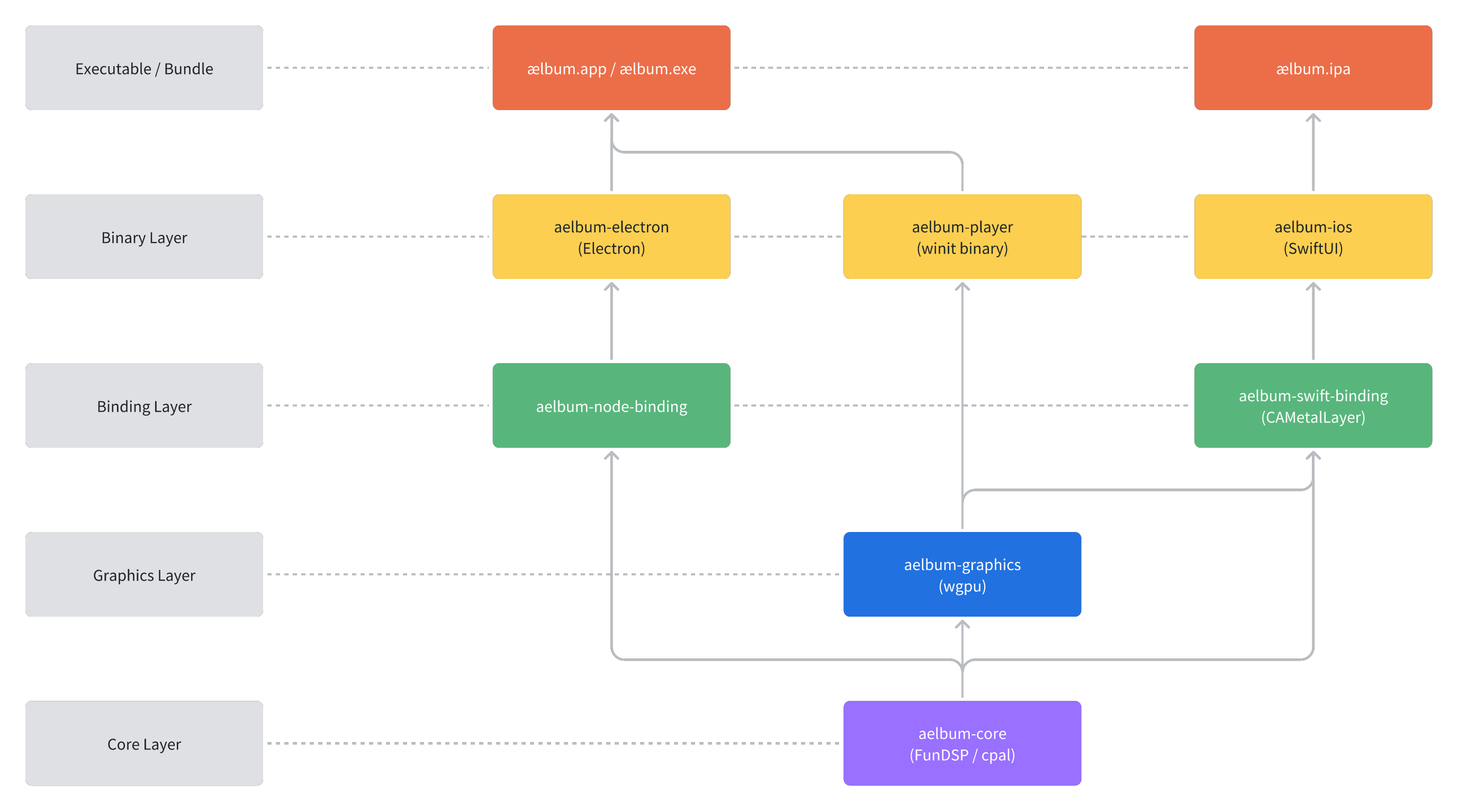

Finally, I abandoned Godot and chose a very low-level implementation approach, redesigning the architecture and completely restructuring the project:

- The rendering layer is built on wgpu, with shaders written in WGSL. It supports almost all the drawing features I wanted, such as compute shaders and indirect draw; the only thing missing is hardware ray tracing APIs.

- The iOS app is natively developed using SwiftUI, which is not only simple, performant, and lightweight but also allows access to Apple-exclusive features like widgets, background music playback, and haptic feedback.

- The Windows/macOS version uses Electron + React.js as a launcher that starts the Rust-written player program for playback, which has some hidden benefits that I'll mention later.

wgpu

There were many reasons for choosing wgpu at the lowest level.

wgpu is a cross-platform rendering library that uses Rust as the development language and WGSL as the shading language, ultimately able to compile and run graphics programs on iOS (Metal), Android (Vulkan), macOS (Metal), and Windows (Vulkan/DirectX). Its cross-platform capabilities attracted me, and combined with Rust's excellent toolchain, wgpu became very suitable for independent development.

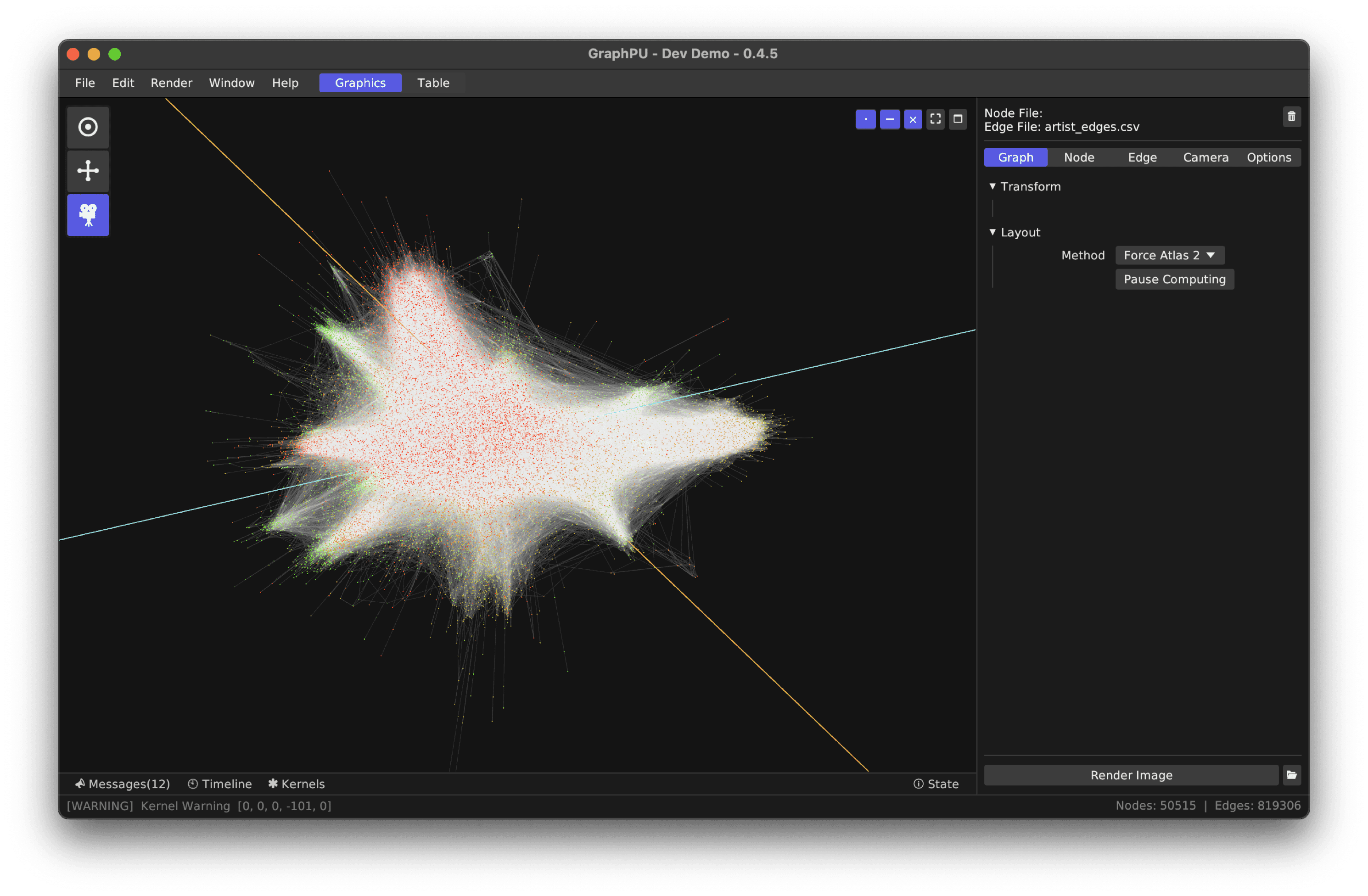

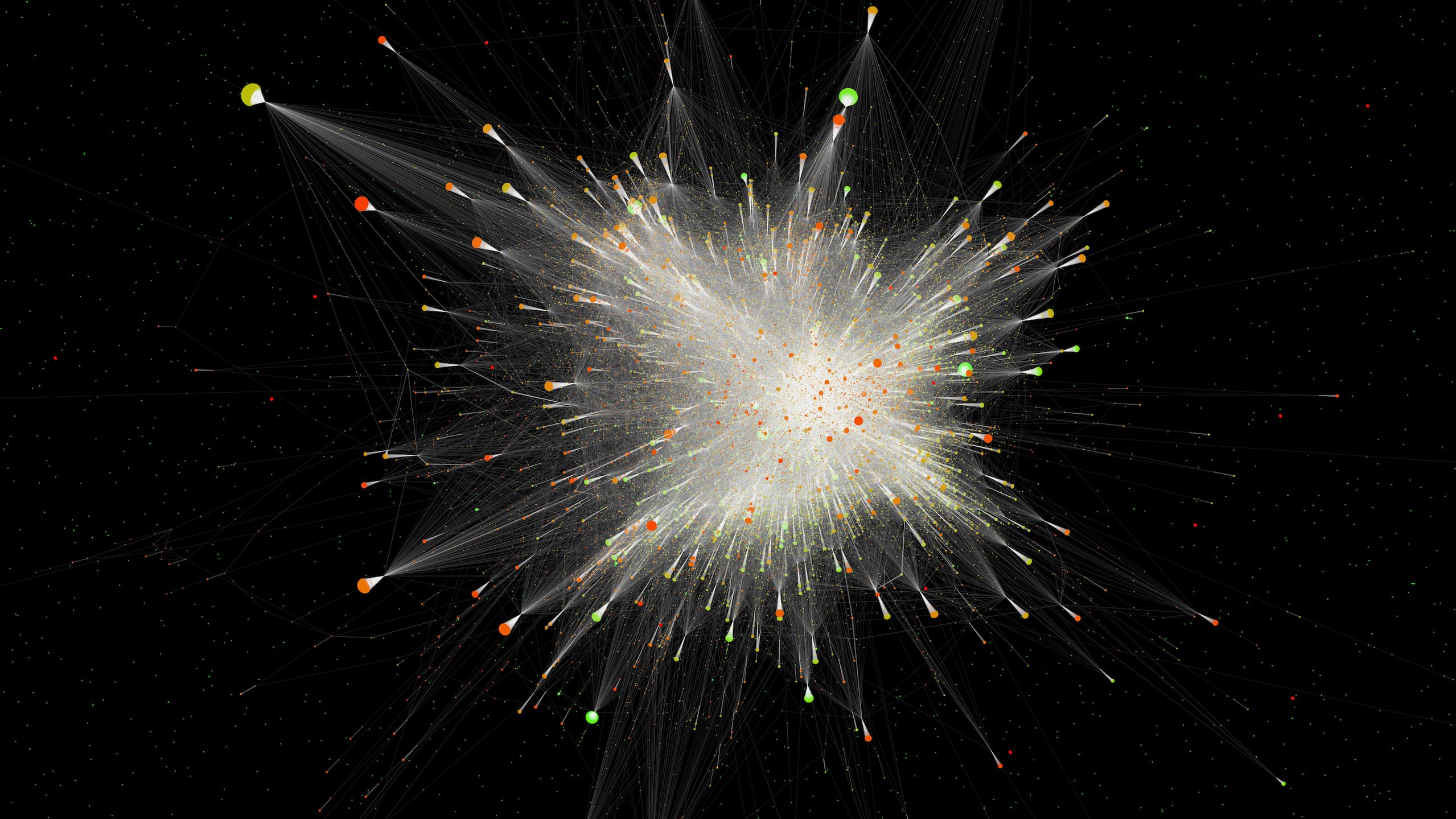

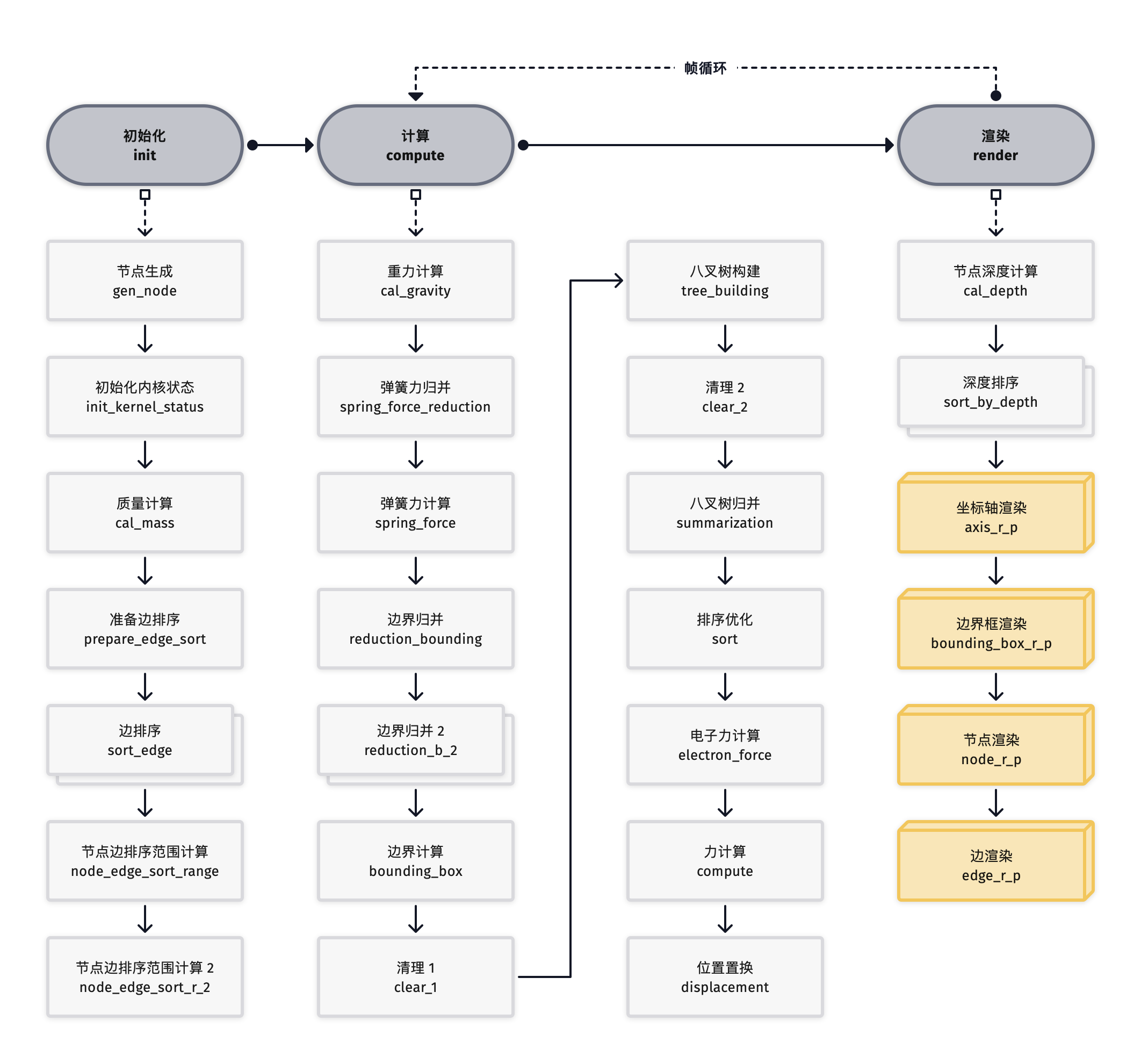

Two years ago, I completed my undergraduate thesis using wgpu: a large-scale 3D graph visualization application called GraphPU. I hand-wrote 18 GPGPU kernels to accelerate the spring-electrical force-directed simulation used for graph layout. To enable compute shader recursion on macOS (for a parallel version of the Barnes-Hut algorithm), we even modified naga, wgpu's shader compiler. During my internship at IEG, my intern leader also taught me how to use RenderDoc to debug GPU programs. All of this gave me more confidence in handling a rendering library whose APIs are unstable but whose features are very future-oriented (WebGPU might become more popular in the future, and bevy, the game engine built on wgpu, is also gradually improving).

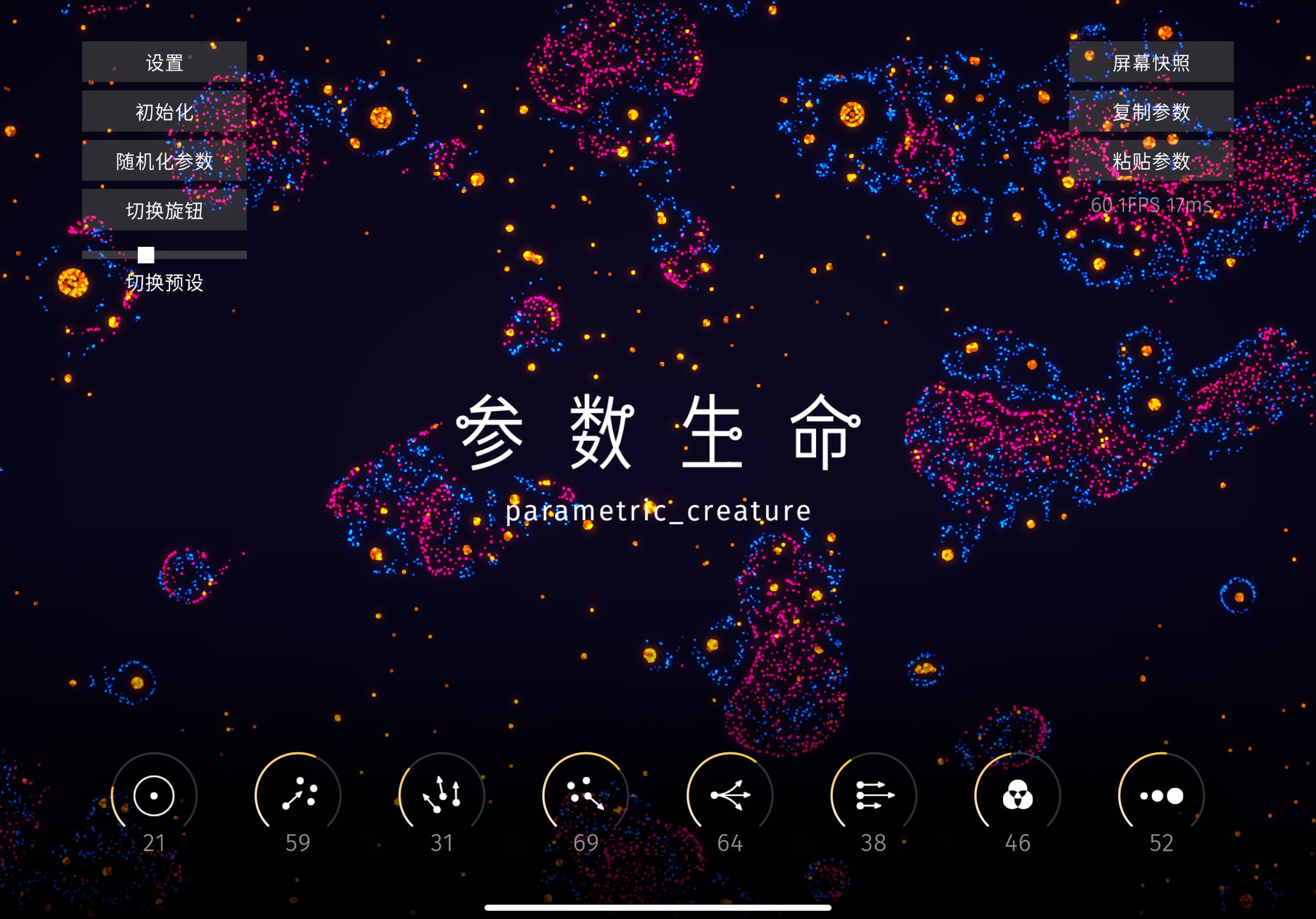

To test whether wgpu could meet my needs (doing what Godot couldn't), I completely recreated one of my earlier new media artworks in wgpu: Parametric Life, a complex system composed of tens of thousands of interacting particles. Without optimization, such a particle system has a computational complexity of O(N²), because every particle interacts with every other particle, and that would be difficult to achieve with VFX Graph or Niagara. But with Claude's help, I migrated the simulation, rendering code, and shader code in almost one day, and because WGSL has better support for structs, the code became clearer and more readable.
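To show where the quadratic cost comes from, here is a CPU-side sketch of one unoptimized all-pairs simulation step. It is only an illustration: the actual interaction rules of Parametric Life are not described here, so the softened inverse-square attraction, the `Particle` layout, and the function names are all assumptions. In a compute shader, the outer loop would typically become one GPU thread per particle, while the inner loop over all other particles stays.

```rust
/// Minimal 2D particle (hypothetical layout for this sketch).
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Particle {
    pub pos: [f32; 2],
    pub vel: [f32; 2],
}

/// Naive all-pairs force accumulation: N * (N - 1) pair evaluations.
/// Uses an assumed inverse-square attraction; `softening` is added to the
/// squared distance to avoid blow-ups when two particles nearly overlap.
pub fn accumulate_forces(particles: &[Particle], softening: f32) -> Vec<[f32; 2]> {
    let mut forces = vec![[0.0f32; 2]; particles.len()];
    for (i, a) in particles.iter().enumerate() {
        for (j, b) in particles.iter().enumerate() {
            if i == j {
                continue;
            }
            let dx = b.pos[0] - a.pos[0];
            let dy = b.pos[1] - a.pos[1];
            let dist_sq = dx * dx + dy * dy + softening;
            // direction / distance^3 == unit direction * 1 / distance^2
            let inv = 1.0 / (dist_sq * dist_sq.sqrt());
            forces[i][0] += dx * inv;
            forces[i][1] += dy * inv;
        }
    }
    forces
}

/// One explicit Euler step using the forces above (unit mass assumed).
pub fn step(particles: &mut [Particle], dt: f32, softening: f32) {
    let forces = accumulate_forces(particles, softening);
    for (p, f) in particles.iter_mut().zip(&forces) {
        p.vel[0] += f[0] * dt;
        p.vel[1] += f[1] * dt;
        p.pos[0] += p.vel[0] * dt;
        p.pos[1] += p.vel[1] * dt;
    }
}
```

At tens of thousands of particles this inner loop runs hundreds of millions of times or more per frame, which is why the work only becomes practical on the GPU or with a spatial acceleration structure.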

To cover future needs for ordinary particle systems in wgpu, I also built a GPU particle system modeled on Unity's (by examining the compiled shaders and library files produced by Shader Graph and Visual Effect Graph) and on those found in open-source engines.

iOS App

After having the wgpu rendering program, how does ælbum solve the problem of displaying rendered images in iOS native UI? This brings us to the work completed by Jinlei Li. He provided vivid documentation and open-source example programs explaining how to embed wgpu in iOS apps, and his app "Zi Xi" is available for download on the App Store.

Years ago, a colleague and I had completed a project that embedded Unity in an iOS app. By comparison, Unity was very heavy, had a mandatory splash screen, and handled screen resizing poorly. Using it purely as a rendering engine was overkill. wgpu solved all of these issues: a basic wgpu implementation might add only 10MB when packaged into an iOS app, and it starts rendering very quickly (almost before the app's opening animation completes). With more direct control over iOS's CADisplayLink, it is also better at starting and stopping rendering and at adjusting frame rate and resolution, and it has excellent support for HDR and off-screen rendering.

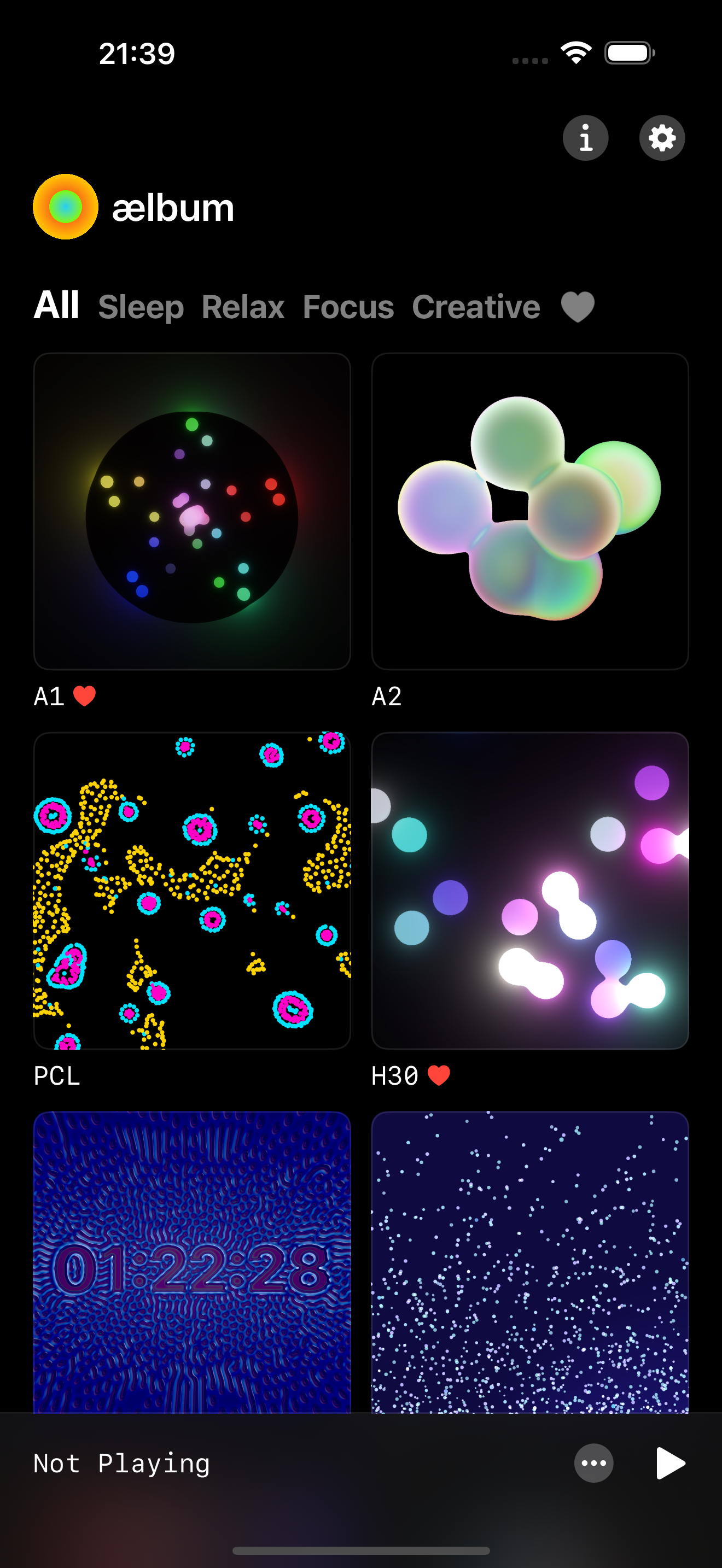

So I created a player app that feels similar to Apple Music. Albums are presented in the native UI, and when the player at the bottom is collapsed, it renders each album's visual content with Gaussian blur. When expanded, it becomes a full-screen browsing and interactive experience.

Because the outer layer is native iOS, we can call any Apple API and pass messages through channels to the rendering program. For example, in the Parametric Life album, the parameter UI no longer needs to be drawn by the rendering program; SwiftUI draws the knobs instead, with very nuanced haptic feedback when a knob is pressed, released, or changes value, which makes for quite an interesting experience. Beyond this, we can also build interactions around the microphone, gyroscope, and Apple Pencil.
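On the Rust side, the message-passing pattern could look roughly like the sketch below, using a standard-library channel that the render loop drains once per frame without blocking. The `UiMessage` variants, the `Renderer` struct, and its methods are hypothetical names for illustration; the real message set is not shown here, and the Swift-to-Rust FFI boundary that would call `send` is left out.

```rust
use std::collections::HashMap;
use std::sync::mpsc::{channel, Receiver, Sender};

/// Messages the native UI can send to the renderer (hypothetical set).
#[derive(Debug, Clone, PartialEq)]
pub enum UiMessage {
    SetParam { name: String, value: f32 },
    Pause,
    Resume,
}

/// Render-side state that owns the receiving end of the channel.
pub struct Renderer {
    inbox: Receiver<UiMessage>,
    pub params: HashMap<String, f32>,
    pub paused: bool,
}

impl Renderer {
    /// Create a renderer plus the sender handle that the UI layer keeps.
    pub fn with_channel() -> (Sender<UiMessage>, Renderer) {
        let (tx, rx) = channel();
        let renderer = Renderer {
            inbox: rx,
            params: HashMap::new(),
            paused: false,
        };
        (tx, renderer)
    }

    /// Call once per frame before drawing. `try_recv` never blocks, so a
    /// quiet UI cannot stall the render loop. Returns how many messages
    /// were applied this frame.
    pub fn drain_messages(&mut self) -> usize {
        let mut applied = 0;
        while let Ok(msg) = self.inbox.try_recv() {
            match msg {
                UiMessage::SetParam { name, value } => {
                    self.params.insert(name, value);
                }
                UiMessage::Pause => self.paused = true,
                UiMessage::Resume => self.paused = false,
            }
            applied += 1;
        }
        applied
    }
}
```

The appeal of this design is that the UI thread only ever enqueues small values, so knob movements and haptics stay responsive even when a frame takes longer than expected.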

At this point, we could finally achieve our initial goal: creating a visual album player. The last step was to support Apple's background mode and make album covers appear in the Control Center. For this, we even wrote a special email to Apple explaining our efforts in real-time audio generation in the background, asking them to grant us permission and not kill the background process.

Desktop App

While developing the iOS app, we also packaged a desktop app using Electron + Rsbuild + React.js.

The biggest surprise in the desktop app was that we successfully hacked Windows and macOS using the windows/cocoa API in Rust, allowing our rendering program to be set as a dynamic desktop wallpaper on both platforms. macOS provides a window hierarchy key for desktop wallpapers, while Windows requires sending a mysterious code: 0x052C (it's really mysterious, couldn't find any documentation about it).

After setting it as the desktop wallpaper, you can code while enjoying the unique atmosphere brought by dynamic wallpaper and audio (just kidding, haven't done this part yet, haha).

Release, Launch, and Future Plans

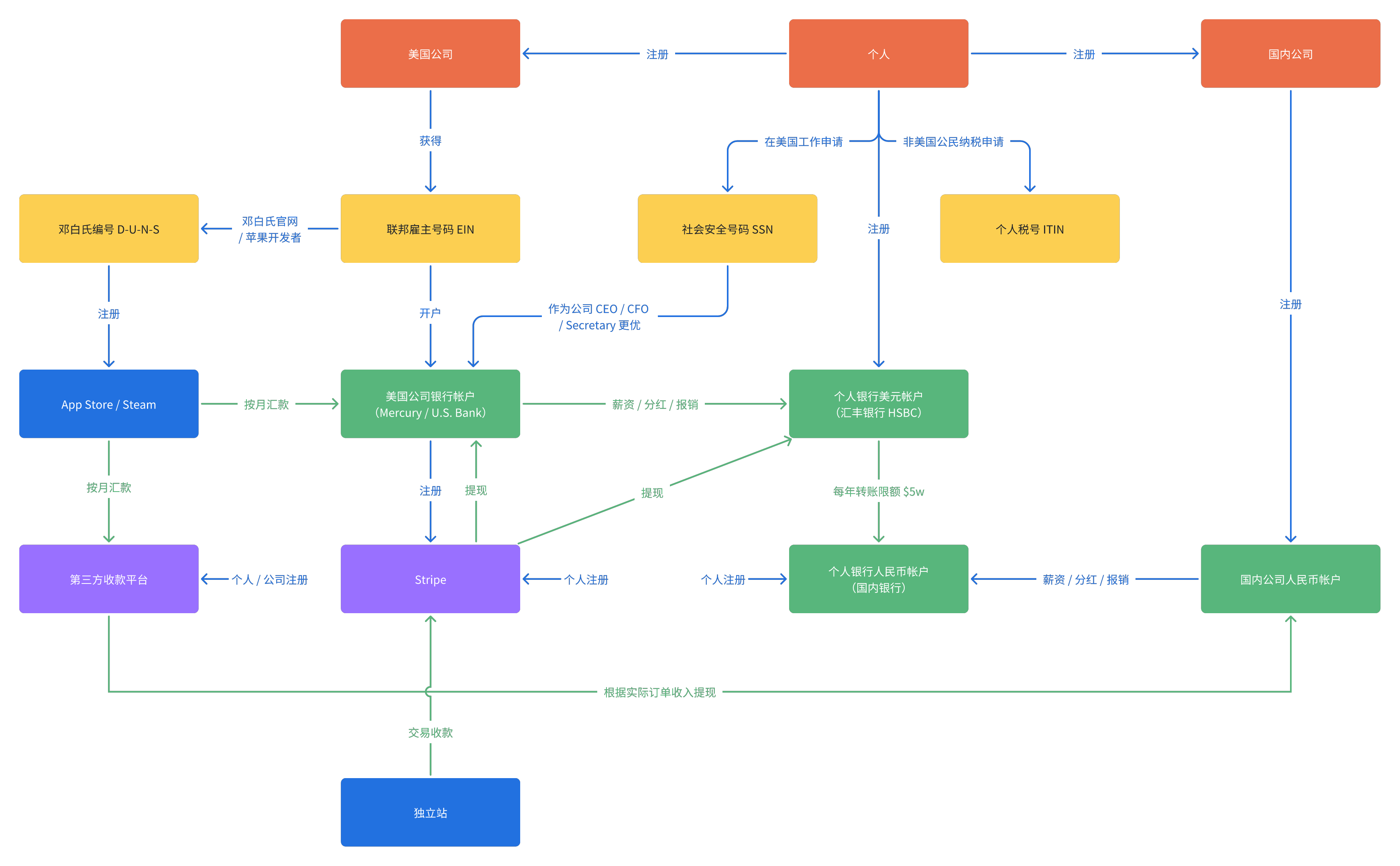

To release this app, I developed a new payment architecture using an offshore U.S. company and U.S. corporate bank account for payments, rather than using payment tools like Pingpong. Here's an architecture setup diagram for reference!

After setup, ælbum successfully launched on both the App Store and Steam. Feel free to download it!

- ælbum Official Website: aelbum.com

- ælbum iOS: apple.co/4allfVP

- ælbum Steam (PC/Mac): store.steampowered.com/app/3477960/ælbum

Moving forward, we will update the ælbum project periodically. I want to add more eye-catching rendering visuals and design more interesting visual albums under four themes: sleep aid, stress relief, focus maintenance, and creativity stimulation. Stay tuned!

Finally, I'm very grateful to my partner CPunisher, who built every one of these projects with me along the way. I couldn't have completed Rust in iOS, Rust in Godot, and Rust + Electron alone (this young genius is also a core team member of the Rust-based frontend compiler SWC and an open-source contributor to frontend toolchains). I look forward to working on more interesting projects together in the future!

Thanks as well to @byh, @qyy, and @jxy for sharing many creative ideas about audio!

That's all!